Cruncher's MW Concerns


| Author | Message |

|---|---|

The Gas Giant Joined: 24 Dec 07 Posts: 1947 Credit: 240,884,648 RAC: 0 |

Lack of cached WUs on GPUs. With a WU taking 55 seconds or less, my quad with 2 GPUs has just under 15 minutes of WUs cached. It would be nice to have 30 to 60 WUs cached per GPU. |
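As a rough sketch of the arithmetic behind this request: assuming every WU takes the 55-second upper bound quoted above, the snippet below converts a per-GPU cache size into minutes of work. The function name `cache_minutes` is purely illustrative and not part of BOINC.

```python
# Upper bound on GPU run time per WU, taken from the post above.
SECONDS_PER_WU = 55


def cache_minutes(wus_per_gpu: int, seconds_per_wu: float = SECONDS_PER_WU) -> float:
    """Minutes of work one GPU holds if it caches `wus_per_gpu` WUs."""
    return wus_per_gpu * seconds_per_wu / 60


if __name__ == "__main__":
    # The post asks for 30 to 60 cached WUs per GPU.
    for n in (30, 60):
        print(f"{n} WUs per GPU -> about {cache_minutes(n):.1f} minutes of work")
```

Under that assumption, the requested 30 to 60 WUs per GPU would be roughly half an hour to just under an hour of work per card.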

Travis Joined: 30 Aug 07 Posts: 2046 Credit: 26,480 RAC: 0 |

Thank you. The current workunits should all take pretty much the same time.

|

Travis Joined: 30 Aug 07 Posts: 2046 Credit: 26,480 RAC: 0 |

"The only thing I am missing here is information: a short briefing on the front page about what is happening, or a mail to top users about what's going on, so they can post a message to some shouts or team site. MW is now my second BOINC project because if Collatz is running, it takes all the GPU, ignoring the MW/Collatz BOINC share; fix that please." You're probably going to have to bear with me for the next couple of weeks while I finish writing my PhD dissertation. After that I'll have a lot more time to watch (and update) the server and keep you guys informed.

|

Travis Joined: 30 Aug 07 Posts: 2046 Credit: 26,480 RAC: 0 |

Sadly, this has been a problem with the project since its inception. Due to what we're doing here, our WUs need a somewhat faster turnaround time, so chances are you're not going to be able to queue up too much work. Also, with the server in its current struggling state, letting people have more WUs in their queue only slows it down further, so it's not something we can really change.

|

The Gas Giant Joined: 24 Dec 07 Posts: 1947 Credit: 240,884,648 RAC: 0 |

I was talking about the future... once the new hardware is operational. |

|

Joined: 21 Aug 08 Posts: 625 Credit: 558,425 RAC: 0 |

Wouldn't it be nice to just not have these continual struggles? The answer isn't more of the same-size tasks you all get now. The answer will still be longer-running tasks for GPUs. It doesn't matter to me if they get the same credit per second as they do now; they just need to be on the order of 100 times longer... |

GalaxyIce Joined: 6 Apr 08 Posts: 2018 Credit: 100,142,856 RAC: 0 |

"It doesn't matter to me if they get the same credit per second as they do now, they just need to be on the order of 100 times longer..." It really makes me wonder sometimes. Just two posts below, Travis says, "Due to what we're doing here our WUs need a somewhat faster turn around time." How do your 100-times-longer WUs make for a faster turnaround time? |

banditwolf Joined: 12 Nov 07 Posts: 2425 Credit: 524,164 RAC: 0 |

"It doesn't matter to me if they get the same credit per second as they do now, they just need to be on the order of 100 times longer..." They would then take an hour or so, like the CPU WUs do. How is that not better? Then each GPU would have a day's work or more instead of 15 minutes. Doesn't expecting the unexpected make the unexpected the expected? If it makes sense, DON'T do it. |

GalaxyIce Joined: 6 Apr 08 Posts: 2018 Credit: 100,142,856 RAC: 0 |

"It doesn't matter to me if they get the same credit per second as they do now, they just need to be on the order of 100 times longer..." I should imagine that there is a scientific/research reason for the length of a WU. It's like suggesting that you stuff a hundred days' food into your pet dog on day one just to make life more convenient for you. |

banditwolf Joined: 12 Nov 07 Posts: 2425 Credit: 524,164 RAC: 0 |

"It doesn't matter to me if they get the same credit per second as they do now, they just need to be on the order of 100 times longer..." Travis already promised GPU WUs 100x longer months ago. He said they had more complex data that could be crunched, so yes, it would be scientific. Doesn't expecting the unexpected make the unexpected the expected? If it makes sense, DON'T do it. |

GalaxyIce Joined: 6 Apr 08 Posts: 2018 Credit: 100,142,856 RAC: 0 |

"It doesn't matter to me if they get the same credit per second as they do now, they just need to be on the order of 100 times longer..." OK, I missed that. It would certainly make a radical difference. But given the reality of the rate of progress here in MW and of the possibilities becoming a reality, Travis may as well say that this project is aiming to put the first donkey on Mars by the year 2012. This is not meant as a criticism of Travis; I'm just saying we can make the best of what we have and leave the pie in the sky for when it happens. |

|

Joined: 21 Aug 08 Posts: 625 Credit: 558,425 RAC: 0 |

Yes, it indeed would... That was the whole premise behind MW_GPU. The current tasks are still within the range of CPUs. If they moved you all off to the other project and did the more complex work, they could be getting a LOT more done. If they were concerned about faster turnaround here, they could give you all the 3-stream (3s) tasks as well, leaving the 1- and 2-stream tasks here. "But given the reality of rate of progress here in MW and the possibilities becoming a reality, Travis may as well say that this project is aiming to put the first donkey on Mars by the year 2012. Not meant to be a criticism of Travis, I'm just saying we can make the best of what we have and leave the pie in the sky for when it happens." So, here's the choice... If the new hardware brings site and work availability to manageable levels for the project, should the project keep it that way, or increase the workload until we are yet again at the point we're at now, thus requiring even more new hardware? If the project is happy with not having to babysit the servers as much, and happy with the rate at which the research is happening, then what exactly gives you the right to demand they do otherwise? Yes, I know that you are providing a service and can stop providing it at any time you choose, but to me that is not "being a team player". If the new server goes in and it makes their life easier and they want to keep it that way for a while, then you should be respectful of that. |

Bymark Joined: 6 Mar 09 Posts: 51 Credit: 492,109,133 RAC: 0 |

"The only thing I am missing here is information: a short briefing on the front page about what is happening, or a mail to top users about what's going on, so they can post a message to some shouts or team site. MW is now my second BOINC project because if Collatz is running, it takes all the GPU, ignoring the MW/Collatz BOINC share; fix that please." Thanks for this weekend: the briefing on the front page was good, the server has been working almost all this time, and I am happy to crunch here. Thanks to Travis and all the admins here! We still need more admins across the pool to brief the crunchers. Good luck on your PhD thesis, Travis, but you really don't need it; all is going to go fine. |

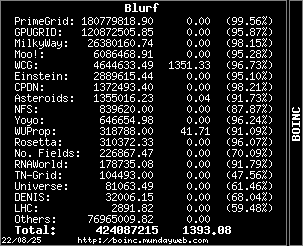

Blurf Joined: 13 Mar 08 Posts: 804 Credit: 26,380,161 RAC: 0 |

Front Page Updates:

Server Crash - Part 5, Server Crash - Part 4, Server Crash - Part 3

|

|

Joined: 11 Sep 08 Posts: 22 Credit: 9,174,947 RAC: 7,011 |

Well, on the first point, that doesn't entirely address the situation at hand. With the CPU renderer, a WU can take 1.5 hours, 2 hours, or so; the same WU can take substantially less time on a GPU. Fast turnaround is one thing, but expecting results back every 30 seconds (12-second completion times) would hardly reduce the load on the servers. At least on the networking end, it would mean chatty boxes that are constantly hammering the server with requests for new work and constantly uploading and reporting results. All these additional requests then have to be handled, and they occur more often. In fact, a larger queue for these people, with a slightly more aggressive backoff algorithm, could help with some types of resource load problems. The problem is that addressing it would require 1. estimating a device's average completion time, and then 2. instead of restricting the queue to x WUs (which assumes all computing devices are equal), restricting it by a time factor y, so that faster devices can get a larger queue. The only problem that introduces is that a single BOINC client could pull WUs for both the CPU and the GPU, and if it comes up as a single computer ID, it might get hairy to say, "OK, the number of GPU WUs allowed to be uncompleted at a time should be one thing, the number of CPU WUs that much smaller." That is, unless there's a way to distinguish between them from the scheduler's standpoint, which would require more info than a simple computer ID with whatever benchmark stats got uploaded, assuming one computation device of equal performance. Allowing 30 minutes or 1 hour of GPU tasks to exist on a box at a time wouldn't delay return times any more than allowing one task to be completed on a CPU that takes longer than that to crunch, even exclusively. Also, the CPU can run a greater number of total projects (scheduled runtime) than projects that are GPU only.
But it does add complexities to the mix, if one's goal is to get results back in said reasonable time frame, when the WU completion time can vary between seconds on the one hand and hours on the other. Both present rather different problems: on the one hand, results that aren't returned for days; on the other, results reported so often, with a constant stream of downloads, that the server is practically hammered with unrelenting uploads, downloads, and requests. There's almost a balance to be had between faster turnaround (and the attendant faster WU generation) and the server load issues that could be introduced if clients have to contact the server all the time, without some form of break before the next request is placed on it via the network pipe, at least on the face of it. But with such a wild variation in completion times, and given that a single box could have one of each device turning results in, meh... The single box would simply have no single constant with respect to performance, due to the variation between the devices doing the tasks, even if the time to completion is what one really wants to get at, ignoring the differences in speed on varying devices which this introduces. |
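The two-step idea in this post (estimate each device's average completion time, then cap the queue by time rather than by WU count) can be sketched roughly as follows. This is only an illustration under stated assumptions: `Device`, `max_in_progress`, and `next_request_delay` are hypothetical names, not BOINC scheduler APIs, and the doubling delay is one possible reading of "a slightly more aggressive backoff", not something the post specifies.

```python
import math
from dataclasses import dataclass


@dataclass
class Device:
    """One class of compute device in a host (hypothetical structure)."""
    kind: str                  # "cpu" or "gpu"
    avg_seconds_per_wu: float  # step 1: estimated from the device's past results
    count: int = 1             # how many such devices the host has


def max_in_progress(device: Device, target_cache_seconds: float, floor: int = 1) -> int:
    """Step 2: cap unfinished WUs by cached time instead of a fixed WU count.

    Each device may hold enough WUs to cover `target_cache_seconds`,
    but never fewer than `floor`, so slow devices still get work.
    """
    per_device = max(floor, math.ceil(target_cache_seconds / device.avg_seconds_per_wu))
    return per_device * device.count


def next_request_delay(base_seconds: float, empty_replies: int, cap_seconds: float) -> float:
    """Assumed backoff: double the wait after each empty reply, up to a cap."""
    return min(cap_seconds, base_seconds * 2 ** empty_replies)


if __name__ == "__main__":
    # Figures taken from the thread: 55 s per GPU WU, about 1.5 h per CPU WU,
    # and a 30-minute cache target. The device counts are made up.
    gpus = Device(kind="gpu", avg_seconds_per_wu=55.0, count=2)
    cpus = Device(kind="cpu", avg_seconds_per_wu=5400.0, count=4)
    for dev in (gpus, cpus):
        print(dev.kind, max_in_progress(dev, target_cache_seconds=1800.0))
    print(next_request_delay(base_seconds=60.0, empty_replies=3, cap_seconds=3600.0))
```

With those numbers the two GPUs would be allowed 66 unfinished WUs between them, while the four CPU cores would be held to one each. Tracking the limit per device kind rather than per computer ID is what sidesteps the mixed CPU/GPU host problem the post raises, at the cost of the scheduler needing per-device timing data.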

|

Joined: 25 Aug 09 Posts: 12 Credit: 179,143,357 RAC: 0 |

Yeah, what this dude said. I think someone's taking them for a ride with these "vibrating hard drives"... Seriously... construction?? Two servers, 1 for CPU tasks and 1 for GPU tasks, which are formulated differently to serve up the data to the project in the required times (as desired by the project), but keep everyone else happy with WU times, and reduce server load! (My suggestion would be no VM'ing them either; give them healthy CPUs and platforms to work with in addition to the suggestions above.) Crunchy crunchy, pair of dragons now... A 4890 joined the fam with my unlocked 550BE, and the 4870 downstairs on the 940 BE is heating my living room. Don't let Collatz get all the credits. Plus, I just got some dudes to join with some heavy-hitting equipment (a 295 and a 5870)... and then 2 days later we go for another huge outage... All my BOINC lobbying takes hits... (I got tough skin, don't worry.) But the RPI team is still the best in the business!! Such efficiency on these ATI cards is the envy of all the distributed computing projects... keep up the good work and thanks. PS Good luck on your dissertation!

|

Bymark Joined: 6 Mar 09 Posts: 51 Credit: 492,109,133 RAC: 0 |

I really don't know how the US mail works, but here in Europe the hard disk would have arrived days ago. I am not in a hurry to crunch MW, just pondering. |

banditwolf Joined: 12 Nov 07 Posts: 2425 Credit: 524,164 RAC: 0 |

"I really don't know how the US mail works, but here in Europe the hard disk would have arrived days ago. I am not in a hurry to crunch MW, just pondering." The US postal service varies from great to not getting your mail (as in, it never comes). I would bet the parts would come by UPS or FedEx (both package delivery services, as USPS mainly delivers letters and envelopes). Doesn't expecting the unexpected make the unexpected the expected? If it makes sense, DON'T do it. |

Travis Joined: 30 Aug 07 Posts: 2046 Credit: 26,480 RAC: 0 |

"I really don't know how the US mail works, but here in Europe the hard disk would have arrived days ago. I am not in a hurry to crunch MW, just pondering." The hard drives are here; they just need to be installed. I'm going to bug labstaff tomorrow to hopefully get things working again.

|

Dan T. Morris Joined: 17 Mar 08 Posts: 165 Credit: 410,228,216 RAC: 0 |

Thank you, Travis :) I am having MW withdrawals, and it's getting to be painful :) Anyway, thank you for the update. Dan |

©2024 Astroinformatics Group