GPUs with different compute capabilities in the same machine



Original poster (Joined: 4 Apr 08, Posts: 10, Credit: 32,120,887, RAC: 0)

I have a box with 2x GTX260 and I recently added a 9800GT. The 9800GT, with compute capability 1.1, should not crunch MW WUs (MilkyWay needs double precision, which on NVIDIA cards requires compute capability 1.3). But it does, and it looks like it is slowing down one of the 260s. Does anybody know how I can prevent the 9800GT from getting MW WUs and use it only for other projects? BTW, when crunching other projects the 9800GT doesn't seem to affect the performance of the 260s.

29.11.2009 19:50:32 Processor: 4 GenuineIntel Intel(R) Core(TM)2 Quad CPU Q9550 @ 2.83GHz [x86 Family 6 Model 23 Stepping 10]
29.11.2009 19:50:32 Processor: 6.00 MB cache
29.11.2009 19:50:32 Processor features: fpu tsc pae nx sse sse2 mmx
29.11.2009 19:50:32 OS: Microsoft Windows XP: Professional x86 Edition, Service Pack 3, (05.01.2600.00)
29.11.2009 19:50:32 Memory: 2.25 GB physical, 4.09 GB virtual
29.11.2009 19:50:32 Disk: 107.42 GB total, 55.52 GB free
29.11.2009 19:50:32 Local time is UTC +1 hours
29.11.2009 19:50:35 NVIDIA GPU 0: GeForce GTX 260 (driver version 19562, CUDA version 3000, compute capability 1.3, 896MB, 633 GFLOPS peak)
29.11.2009 19:50:35 NVIDIA GPU 1: GeForce 9800 GT (driver version 19562, CUDA version 3000, compute capability 1.1, 512MB, 308 GFLOPS peak)
29.11.2009 19:50:35 NVIDIA GPU 2: GeForce GTX 260 (driver version 19562, CUDA version 3000, compute capability 1.3, 896MB, 633 GFLOPS peak)

The Gas Giant (Joined: 24 Dec 07, Posts: 1947, Credit: 240,884,648, RAC: 0)

Read the readme that comes with the optimised app and set up an app_info.xml file with the GPU exclusion option:

> Exclusion of GPUs: xN (default none)
>
> If a certain GPU should not be used by the app, one can exclude it. The GPU number has to be in the range 0..31. The first GPU in the system has the number 0. This option can be used multiple times. By default, all double precision capable ATI GPUs are used. You don't have to exclude cards supporting only single precision; it is done automatically.
>
> Example: `<cmdline>x1 x2</cmdline>` The app will not use the second and the third GPU in the system.
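To make the quoted readme rule concrete, here is a minimal Python sketch. It assumes only what the readme says: each `xN` token excludes GPU N, the first GPU is 0, N must lie in 0..31, and the option may repeat. The function names are hypothetical; this is not the optimised app's source, and it does not model the automatic skipping of single-precision cards.

```python
import re

# Hypothetical sketch of the exclusion rule quoted from the readme;
# it is not the optimised app's actual source code.
MAX_GPU_NUMBER = 31  # the readme allows GPU numbers 0..31


def excluded_gpus(cmdline: str) -> set[int]:
    """Collect the GPU numbers excluded by 'xN' tokens in a <cmdline> value."""
    excluded: set[int] = set()
    for token in cmdline.split():
        match = re.fullmatch(r"x(\d+)", token)
        if match is None:
            continue  # not an exclusion option; other options are skipped here
        gpu = int(match.group(1))
        if gpu > MAX_GPU_NUMBER:
            raise ValueError(f"GPU number {gpu} is outside 0..{MAX_GPU_NUMBER}")
        excluded.add(gpu)
    return excluded


def gpus_to_use(cmdline: str, gpu_count: int) -> list[int]:
    """GPUs left for the app, numbering the first GPU in the system as 0."""
    skip = excluded_gpus(cmdline)
    return [gpu for gpu in range(gpu_count) if gpu not in skip]


# The readme's example on a three-GPU system leaves only the first GPU.
print(gpus_to_use("x1 x2", 3))
```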

JerWA (Joined: 22 Jun 09, Posts: 52, Credit: 74,110,876, RAC: 0)

Problem is, that's not working. This is the first time I've been back to MW since swapping cards around, and I have an HD4890 and an HD4350 in this system, and BOTH picked up MW work! Oddly, both WUs are progressing at about the same speed, which is definitely not right. Both say they completed successfully, 5m 12 seconds exactly for both. What's the crunch time for a WU on an HD4890 these days, ballpark?

12/3/2009 7:07:35 PM ATI GPU 0: ATI Radeon HD 4700/4800 (RV740/RV770) (CAL version 1.4.467, 1024MB, 1536 GFLOPS peak)
12/3/2009 7:07:35 PM ATI GPU 1: ATI Radeon HD 4350/4550 (R710) (CAL version 1.4.467, 512MB, 112 GFLOPS peak)

Ahhh, I see what it's doing. It's automagically running both WUs on the HD4890. Shouldn't it just make one wait instead of running both simultaneously? Especially since the client says they are running on ATI device 0 and ATI device 1, but in reality they're not:

http://milkyway.cs.rpi.edu/milkyway/result.php?resultid=7468346 ("running" on the 4350)
http://milkyway.cs.rpi.edu/milkyway/result.php?resultid=7468276 (running on the 4890)

Edit: FWIW, adding x1 to the command line did nothing. It's still running 2 WUs at a time, 1 per GPU as far as the client is concerned, even though one of the GPUs doesn't support the app and the work is being shunted to the other GPU.

verstapp (Joined: 26 Jan 09, Posts: 589, Credit: 497,834,261, RAC: 0)

Paul D. Buck (Joined: 12 Apr 08, Posts: 621, Credit: 161,934,067, RAC: 0)

> Problem is, that's not working. This is the first time I've been back to MW since swapping cards around, and I have an HD4890 and an HD4350 in this system, and BOTH picked up MW work! Oddly, both WUs are progressing at about the same speed, which is definitely not right. Both say they completed successfully, 5m 12 seconds exactly for both.

This is an artifact of the bad implementation of GPU computing, where UCB (the BOINC developers at Berkeley) ignored the issue of asymmetric cards, leaving only the option of running on the best card in cases of mismatch. I raise these issues whenever I can, and there are noises that UCB may consider solving this. Sadly, the most public statements are that the only option is to run ALIKE GPUs in the system, instead of facing the reality that this is not very practical for any number of reasons, primary among them financial. Most people simply cannot afford to upgrade all cards in single swell foops and are stuck with piecemeal changes, one card at a time. In a two-slot configuration, should they throw away a resource just because UCB is lazy?

Anyway, that does not really address your situation. I strongly suggest that you drop a note onto the BOINC Alpha mailing list (or Dev) and explain your situation. If we can get more people to do this, then UCB will (eventually) have to recognize that it is a common situation that affects more people than just me.

verstapp (Joined: 26 Jan 09, Posts: 589, Credit: 497,834,261, RAC: 0)

[KWSN]John Galt 007 (Joined: 12 Dec 08, Posts: 56, Credit: 269,889,439, RAC: 0)

> Problem is, that's not working. This is the first time I've been back to MW since swapping cards around, and I have an HD4890 and an HD4350 in this system, and BOTH picked up MW work! Oddly, both WUs are progressing at about the same speed, which is definitely not right. Both say they completed successfully, 5m 12 seconds exactly for both.

My 4890 is running them in about 160s... 1 WU at a time... http://milkyway.cs.rpi.edu/milkyway/show_host_detail.php?hostid=40768

Click to help Seti City.

JerWA (Joined: 22 Jun 09, Posts: 52, Credit: 74,110,876, RAC: 0)

Unfortunately the cmdline x1 doesn't work with an "ATI aware BOINC client", and the client is not excluding the single precision card as advertised, so I'm left with it force-feeding 2 WUs at once to a single card while the manager thinks both cards are running. Grr. Any hope of making x1 hard-coded again, so that it will smack even the new manager and make it leave the card alone?

Paul D, the 4350 is only in this machine to crunch at this point, and at Collatz they both happily run together (though the manager queues work evenly, i.e. the max WUs for both cards, despite the fact that the 4350 would take about a week to do that many WUs). I don't know anything about the mailing lists, despite having been a user since before there was such a thing as BOINC. I know the GPU stuff is all pretty fresh; I'm just happy it's mostly working lol.

kashi (Joined: 30 Dec 07, Posts: 311, Credit: 149,490,184, RAC: 0)

Isn't this done with `<ignore_ati_dev>n</ignore_ati_dev>` in a cc_config.xml file since BOINC 6.10.19? For example:

Disable first ATI GPU: `<cc_config> <options> <ignore_ati_dev>0</ignore_ati_dev> </options> </cc_config>`

Disable second ATI GPU: `<cc_config> <options> <ignore_ati_dev>1</ignore_ati_dev> </options> </cc_config>`

You would need to remove or disable the cc_config.xml file, though, when you wanted to crunch Collatz on your 4350. I have never used this option, so I don't know how well it works.
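If you would rather generate the file than type it, here is a minimal Python sketch that builds the same `cc_config`/`options` structure with the standard library. The `build_cc_config` name is hypothetical; the option names are the ones quoted in this thread, and whether a given client version honours more than one ignore entry is worth checking in the BOINC documentation for that version.

```python
import xml.etree.ElementTree as ET


def build_cc_config(ignored: list[int], tag: str = "ignore_ati_dev") -> str:
    """Return cc_config.xml text that asks the BOINC client to skip GPUs.

    tag: "ignore_ati_dev" for ATI cards or "ignore_cuda_dev" for NVIDIA
    cards, the option names quoted in this thread (BOINC 6.10.19 and later).
    """
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    for index in ignored:
        # One element per device, numbered as in the client's startup log.
        ET.SubElement(options, tag).text = str(index)
    ET.indent(root)  # pretty-print with two-space indentation (Python 3.9+)
    return ET.tostring(root, encoding="unicode")


# Equivalent to the "Disable second ATI GPU" example above.
print(build_cc_config([1]))
```

The output goes into cc_config.xml in the BOINC data directory; the exact location depends on the install.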

JerWA (Joined: 22 Jun 09, Posts: 52, Credit: 74,110,876, RAC: 0)

6.10.19 is not yet "public" for Windows, and I try to avoid the dev versions unless something is badly broken. It's crunching a bit slower than it should because it's doubling up WUs; eh, I'll live with it. Having to kill the manager every time I want to switch GPU projects would be a PITA. And I'd surely forget it, then wonder why only 1 GPU was crunching and spend 2 hrs fighting with it before remembering, hehe.

uBronan (Joined: 9 Feb 09, Posts: 166, Credit: 27,520,813, RAC: 0)

LOL, the only thing I can think of is that it acts like my HD3200 and 4770: they are both automagically set up as CrossFireX. That's probably your case too. The HD3200 is absolutely not usable for crunching, but now it is somehow helping the faster card. I have no clue how, but it's running at 100% all the time :D

It's new, it's relatively fast... my new bicycle

Joined: 20 Sep 08, Posts: 1391, Credit: 203,563,566, RAC: 0

> I only have 4870s, but they're doing the long WUs in about 188sec.

> My 4890 is running them in about 160s...1 WU at a time.

I just got my first 4870 up and running. Results as follows:

- 3850: 631 secs for 213 cr
- 4850: 211 secs for 213 cr
- 4870: 186 secs for 213 cr

I think the 4850s are better value for money, as a 4870 costs a third as much again for only about a 12% shorter run time per WU.

Don't drink water, that's the stuff that rusts pipes
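The value-for-money comparison can be checked with a little arithmetic. This Python sketch uses only the timings posted above, plus one labelled assumption: that a 4870 costs "a third as much again" as a 4850, as the poster says. No actual prices are given in the thread.

```python
# Seconds per 213-credit WU, as posted above for each card.
CREDITS_PER_WU = 213
SECONDS_PER_WU = {"HD 3850": 631, "HD 4850": 211, "HD 4870": 186}


def credits_per_hour(seconds_per_wu: float, credits: float = CREDITS_PER_WU) -> float:
    return credits * 3600 / seconds_per_wu


for card, seconds in SECONDS_PER_WU.items():
    print(f"{card}: {credits_per_hour(seconds):.0f} credits/hour")

t_4850, t_4870 = SECONDS_PER_WU["HD 4850"], SECONDS_PER_WU["HD 4870"]
time_saving = 1 - t_4870 / t_4850  # shorter run time per WU
extra_throughput = t_4850 / t_4870 - 1  # more WUs per hour
# Assumption from the post: a 4870 costs "a third as much again" as a 4850.
value_ratio = (t_4850 / t_4870) / (4 / 3)  # 4870 credits per unit cost vs 4850
print(f"time saved {time_saving:.1%}, throughput +{extra_throughput:.1%}, "
      f"value ratio {value_ratio:.2f}")
```

The 12% in the post is the time saving per WU; expressed as throughput, the 4870 does about 13% more WUs per hour. Under the price assumption it delivers roughly 0.85 of the 4850's credits per unit of money, which supports the poster's conclusion.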

kashi (Joined: 30 Dec 07, Posts: 311, Credit: 149,490,184, RAC: 0)

> 6.10.19 is not yet "public" for Windows, and I try to avoid the dev versions unless something is badly broken. It's crunching a bit slower than it should because it's doubling up WUs; eh, I'll live with it. Having to kill the manager every time I want to switch GPU projects would be a PITA. And I'd surely forget it, then wonder why only 1 GPU was crunching and spend 2 hrs fighting with it before remembering, hehe.

I don't even know what the current "public" release version is. I started using the development versions to support Collatz ATI a while ago and never stopped. Yes, using cc_config.xml is not ideal. I have a shortcut to cc_config.xml on my desktop and used it to change the number of BOINC cores when I ran certain projects. There was no trouble doing it, it was easy, but I often used to forget. I didn't need to shut down and restart BOINC, though; I just changed the file and clicked Advanced > Read config file.
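Switching between "GPU ignored" and "all GPUs" can be reduced to renaming one file. Here is a minimal Python sketch of that idea. The `toggle_cc_config` helper and the `.disabled` suffix are hypothetical conventions chosen for this sketch, not BOINC features; the client only looks for a file named cc_config.xml.

```python
from pathlib import Path

# Hypothetical helper: the ".disabled" suffix is a naming convention chosen
# for this sketch; the BOINC client only reads a file named cc_config.xml.
ACTIVE_NAME = "cc_config.xml"
PARKED_NAME = "cc_config.xml.disabled"


def toggle_cc_config(data_dir: str) -> bool:
    """Enable or disable cc_config.xml by renaming it; True means now active."""
    base = Path(data_dir)
    active, parked = base / ACTIVE_NAME, base / PARKED_NAME
    if active.exists() and parked.exists():
        raise FileExistsError(f"both {ACTIVE_NAME} and {PARKED_NAME} exist in {base}")
    if active.exists():
        active.rename(parked)
        return False
    if parked.exists():
        parked.rename(active)
        return True
    raise FileNotFoundError(f"no {ACTIVE_NAME} or {PARKED_NAME} in {base}")
```

Point it at your BOINC data directory, then have the client re-read the file (Advanced > Read config file in the Manager). kashi's experience was with the CPU-count option. Whether the GPU-ignore options take effect on a re-read or only after a client restart is worth checking for your BOINC version.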

JerWA (Joined: 22 Jun 09, Posts: 52, Credit: 74,110,876, RAC: 0)

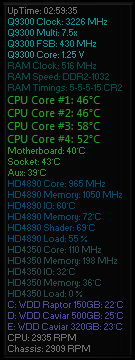

12/5/2009 5:46:43 PM Config: ignoring ATI GPU 1 12/5/2009 5:46:43 PM ATI GPU 0: ATI Radeon HD 4700/4800 (RV740/RV770) (CAL version 1.4.467, 1024MB, 1536 GFLOPS peak) 12/5/2009 5:46:43 PM ATI GPU 1 (ignored by config): ATI Radeon HD 4350/4550 (R710) (CAL version 1.4.467, 512MB, 112 GFLOPS peak) 12/5/2009 5:46:44 PM Version change (6.10.18 -> 6.10.21) It's now running just 1 at a time, danke. Would still prefer if the app behaved as the documentation says it does. Or perhaps the manager, since I think the app is doing what it should mostly (it's saying hey, I can't run on this!) and then the manager is shunting it to the other card automagically rather than just making the WU wait. As an aside, MW cranks on my cards way harder than Collatz does. Interestingly CCC reports the same load, in the mid 90% range, but temps on the card are so hot I had to bump the fan up from 45% to 75% to keep them under 80C. That's with 16C ambients, so I imagine despite the upgraded cooler and uber airflow in my case this setup won't work in the summer. Food for thought. At any rate, looks like my HD4890 is taking 150 seconds for 213 credits now. Cheers. Love this lil addon (and it figures I just happened to cap it on the downswing of a WU lol):

Original poster (Joined: 4 Apr 08, Posts: 10, Credit: 32,120,887, RAC: 0)

Well thanks... but since those are NVIDIA GPUs I don't use the optimized app, and therefore I don't have an app_info file. Do you mean I should set up such a file and adapt it to the stock app?

kashi (Joined: 30 Dec 07, Posts: 311, Credit: 149,490,184, RAC: 0)

> Well thanks... but since those are NVIDIA GPUs I don't use the optimized app, and therefore I don't have an app_info file.

For NVIDIA cards you can use `<ignore_cuda_dev>n</ignore_cuda_dev>` in a cc_config.xml file. This requires BOINC 6.10.19 or later. For example:

Disable first NVIDIA GPU: `<cc_config> <options> <ignore_cuda_dev>0</ignore_cuda_dev> </options> </cc_config>`

Disable second NVIDIA GPU: `<cc_config> <options> <ignore_cuda_dev>1</ignore_cuda_dev> </options> </cc_config>`

Disable third NVIDIA GPU: `<cc_config> <options> <ignore_cuda_dev>2</ignore_cuda_dev> </options> </cc_config>`

In your startup log the 9800 GT is listed as NVIDIA GPU 1, so the second example would be the one to try. This is possibly not suitable for you, since you would need to remove or disable the cc_config.xml file whenever you wanted to crunch other projects on your GPUs and use your 9800GT for them as well.
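Picking the index by hand is easy with three cards, but the rule can also be applied mechanically. This Python sketch assumes the startup-log line format shown in the first post of this thread and flags every NVIDIA GPU below compute capability 1.3, the minimum for double precision on NVIDIA hardware. The function and regex names are hypothetical, and other client versions may word their log lines differently.

```python
import re

# Matches startup-log lines in the format shown in the first post, e.g.
# "NVIDIA GPU 1: GeForce 9800 GT (driver version 19562, CUDA version 3000,
#  compute capability 1.1, 512MB, 308 GFLOPS peak)"
GPU_LINE = re.compile(r"NVIDIA GPU (\d+): (.+?) \(.*?compute capability (\d+)\.(\d+)")
MIN_CC = (1, 3)  # double precision on NVIDIA needs compute capability 1.3+


def cuda_devs_to_ignore(log_text: str) -> list[int]:
    """Indices of NVIDIA GPUs whose compute capability is below MIN_CC."""
    ignore = []
    for match in GPU_LINE.finditer(log_text):
        index, _name, major, minor = match.groups()
        if (int(major), int(minor)) < MIN_CC:
            ignore.append(int(index))
    return ignore


log = """\
29.11.2009 19:50:35 NVIDIA GPU 0: GeForce GTX 260 (driver version 19562, CUDA version 3000, compute capability 1.3, 896MB, 633 GFLOPS peak)
29.11.2009 19:50:35 NVIDIA GPU 1: GeForce 9800 GT (driver version 19562, CUDA version 3000, compute capability 1.1, 512MB, 308 GFLOPS peak)
29.11.2009 19:50:35 NVIDIA GPU 2: GeForce GTX 260 (driver version 19562, CUDA version 3000, compute capability 1.3, 896MB, 633 GFLOPS peak)
"""
for index in cuda_devs_to_ignore(log):
    print(f"<ignore_cuda_dev>{index}</ignore_cuda_dev>")
```

With the log from the first post, the only device flagged is the 9800 GT at index 1.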

kashi (Joined: 30 Dec 07, Posts: 311, Credit: 149,490,184, RAC: 0)

Glad it is working for you. Yes, MilkyWay sure runs hotter than Collatz. During the hot weather here recently I had to use w1.2 on my 4890 to keep the temp from exceeding 90°C. That card has an Accelero Twin Turbo Pro cooler, and the cooling is OK but nothing brilliant; it's beautifully quiet though, virtually silent.

A few days ago I replaced it with a 5870 Vapor-X, which cools really well, and I can run the default w1 and even f20 just for spice, although I don't think adjusting the f parameter makes much if any difference for me on the current length tasks. It's currently at 890 MHz and the temp has never exceeded 70°C so far, but the fan is quite noisy with my open case. It's not super loud, but the whining tone, like a vacuum cleaner, makes it penetrating when I am abed. I might have to buy a newer case with better cooling so I can put the sides on. Perhaps a Silverstone FT-02 would be suitable, with its vertical layout for GPU heat and foam lining for the noise. It's only got 7 slots though, so that may limit future expansion a bit. Expensive too; ah well, shrouds don't have pockets.

It doesn't show with a 4890, but with a 5870 GPU-Z shows the power draw. At 100% GPU load, Collatz shows a VDDC current of 41-43 amps while MilkyWay shows 61-63 amps, so MilkyWay is certainly giving the cards a more rigorous workout.

That little add-on does look handy. Is that Everest, going by the image name? I wouldn't mind something that shows the GPU temp, because ATI Tray Tools is incompatible with my 5870 and caused only striped lines to appear after booting to the desktop. That drama caused a few anxious moments, because I thought the card was faulty and I knew it would be difficult to get a replacement for a long time here. The little screws on the top of the bracket made it very difficult to fit too, so it's a tiny bit lopsided, but it is such a corker at pumping out the WUs that all is now forgiven. :)

JerWA (Joined: 22 Jun 09, Posts: 52, Credit: 74,110,876, RAC: 0)

Yes, it's a Vista gadget from Everest Ultimate. It's not a free solution, but there may be free ones out there, since it's just reading the sensors like any app could. It has quite a few more things you can choose; that's just my particular setup. I've actually upgraded recently to a newer version that shows another heat sensor on the GPUs, as well as GPU fan speed.

The other handy thing Everest lets you do is set alarms and actions. I have it set to shut the system off if any of the GPU temps hit 100C, or if any of the CPU cores hit 75C, just in case a fan dies or something while I'm not here. Much cheaper to lose a few hours of crunching than to have a component burn up!
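The alarm rule described above is a simple threshold check. Here is a minimal Python sketch of just the decision, assuming the temperatures have already been read from some sensor tool. The `Readings` class and sample values are hypothetical; this is not Everest's API, and the sketch does not shut anything down itself.

```python
from dataclasses import dataclass

# Thresholds from the post above; readings would come from whatever sensor
# tool you use and are passed in by hand in this sketch.
GPU_LIMIT_C = 100.0
CPU_LIMIT_C = 75.0


@dataclass
class Readings:
    gpu_temps_c: list[float]
    cpu_core_temps_c: list[float]


def should_shut_down(r: Readings) -> bool:
    """True if any GPU reaches 100 C or any CPU core reaches 75 C."""
    return any(t >= GPU_LIMIT_C for t in r.gpu_temps_c) or any(
        t >= CPU_LIMIT_C for t in r.cpu_core_temps_c
    )


print(should_shut_down(Readings([78.0, 64.0], [55.0, 57.0, 54.0, 56.0])))
print(should_shut_down(Readings([101.0], [55.0])))
```

Keeping the decision separate from the sensor reading and the shutdown action makes the thresholds easy to test and adjust.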


©2026 Astroinformatics Group