Host with WAY too many tasks.


| Author | Message |

|---|---|

banditwolf Joined: 12 Nov 07 Posts: 2425 Credit: 524,164 RAC: 0 |

"I believe they are all the same system being they all have the exact specs" Yes, it seems so to me. Doesn't expecting the unexpected make the unexpected the expected? If it makes sense, DON'T do it. |

Beyond Joined: 15 Jul 08 Posts: 383 Credit: 729,293,740 RAC: 0 |

"I believe they are all the same system being they all have the exact specs" As Ba pointed out, you can see exactly what he's doing here: http://www.overclock.net/blogs/blox/2050-bloxcache-boinc-caching-batch-file-initialisation.html He's trying to get past the WU limitation problem on GPUs. We've suggested better alternatives. Hopefully they're being considered, as that would be the best way to keep this sort of thing from happening. |

|

Joined: 6 May 09 Posts: 217 Credit: 6,856,375 RAC: 0 |

Host: http://milkyway.cs.rpi.edu/milkyway/show_host_detail.php?hostid=247482 It shows a declining number of tasks, and some are now validating. I'll put him on 'probation' and see if the number of tasks keeps going down. If not, I'll throw a ban his way. We have issued a notice on the News page: http://milkyway.cs.rpi.edu/milkyway/forum_thread.php?id=2179#45739 asking users to stop trying to artificially increase their caches and explaining why doing so may be hurting the project. If they don't stop soon, I will start throwing bans around. Cheers, Matthew |

|

Joined: 21 Nov 09 Posts: 49 Credit: 20,942,758 RAC: 0 |

Matt, It's great that you guys posted that, but do you have a plan to check for and deal with people who are somehow bypassing the cache limit, either by getting more than 6 WUs per core or by using the Blox method of creating many caches? We in the community can only do so much, because only a small number of WUs need to be validated and they are removed from the database rather quickly. And with the multiple-cache method, setting one's computers to private would prevent a member of the community from seeing that it's been done. So that I'm not just asking questions but perhaps helping with a solution: perhaps use a script that checks whether any computers have more than X WUs "In Progress", or whether an account has more than X identical machines? Then you can deal with them as you feel necessary. Or a per-account limit on the maximum number of tasks in progress across all computers on an account? Perhaps something like 1000 WUs "In Progress", which would mean someone needs 166+ CPU cores to be able to cache more. I doubt many have more cores than that on their account. A script that force-merged hosts at some random interval, say between 1 and 10 days so people couldn't plan for it, might fix anyone using the multiple-cache method, as it would merge all the computers into one? -TJ |
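The two checks TJ suggests could be sketched roughly as below. This is only an illustration under stated assumptions: the `Host` record, its field names, and the thresholds are hypothetical and do not reflect the project's actual database schema or any real BOINC server API.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    """Hypothetical host record; field names are assumptions for illustration."""
    host_id: int
    user_id: int
    cpu_model: str
    n_cores: int
    gpu_model: str
    in_progress: int  # tasks currently "In Progress" on this host


def flag_hosts(hosts, per_core_limit=6):
    """Return IDs of hosts holding more tasks than the per-core limit allows."""
    return [h.host_id for h in hosts
            if h.in_progress > per_core_limit * h.n_cores]


def flag_accounts(hosts, max_in_progress=1000, max_identical=5):
    """Return user IDs whose hosts together exceed the account-wide task cap,
    or who own suspiciously many hosts with identical specs."""
    total = defaultdict(int)
    identical = defaultdict(Counter)
    for h in hosts:
        total[h.user_id] += h.in_progress
        identical[h.user_id][(h.cpu_model, h.n_cores, h.gpu_model)] += 1

    flagged = {user for user, n in total.items() if n > max_in_progress}
    flagged |= {user for user, specs in identical.items()
                if max(specs.values()) > max_identical}
    return sorted(flagged)
```

Flagged hosts and accounts would still need a manual look, since a large farm of genuinely identical machines would trip the second check as well.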

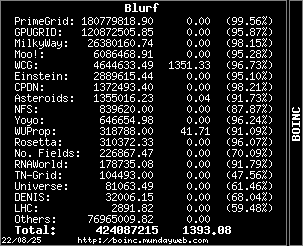

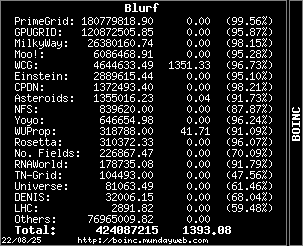

Blurf Joined: 13 Mar 08 Posts: 804 Credit: 26,380,161 RAC: 0 |

Matt, Astromancer... We appreciate the thoughts you propose, and Matt/Travis will work on the possibilities. Fact is it's difficult to know with so many users, but we are taking on a zero-tolerance policy for ppl who manipulate the limits. I guarantee we'll address them.

|

|

Joined: 21 Nov 09 Posts: 49 Credit: 20,942,758 RAC: 0 |

Blurf, I know of course that Matt / Travis will be the people who do anything with planning or the like. I just don't like being a person with only questions, though being someone with suggestions can be just as bad I suppose! Ha! I'm glad that there is / will be a plan for how to find, and what to do with, those who are causing potential problems by hoarding the work. I just wanted to know that the post asking people not to do it wouldn't be the only thing being done. And I'm glad that there is a bite to back up the bark. So thanks guys for all the work you do keeping this all running for our crazy hobby! And I hope everything sorts itself out nicely with this current craziness. |

|

Joined: 4 Jan 10 Posts: 86 Credit: 51,753,924 RAC: 0 |

"we are taking on a zero-tolerance policy for ppl who manipulate the limits. I guarantee we'll address them." That's awesome... you prefer to fight the repercussions and the users instead of the reasons causing this. I think it's pretty evident that the rule of thumb "6 WUs per CPU core" has not been working for video card crunching for quite some time already. Let's take me as an example. I had a dual-core rig with a 4890 and a 4870. I used to get 12 WUs, which gives only a 15 min buffer. My plan was to get three 6970s, which are three times faster than a 48x0. In that case the buffer is only about THREE minutes. That's ridiculous... Why don't you want to make crunchers' lives easier and finally change this (sorry to say) stupid rule, where the WU limit for crunching on video cards depends on the number of CPU cores? What's the logic behind this (sorry again) stupid rule? And pls do not tell me that it's impossible to track how many video cards a rig has. For example, PrimeGrid has a limit of 100 WUs per video card, i.e. a rig with a single video card gets 100 WUs and one with two video cards gets 200 WUs. PrimeGrid managed to implement this. So why don't you contact the PrimeGrid guys? I don't think they will refuse to help you with ideas and maybe even with code. What are you waiting for?
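The buffer arithmetic in this post can be checked with a small sketch. The 2.5 minutes per WU for a 48x0-class card is not stated in the post; it is inferred here from "12 WUs gives a 15 min buffer" on two cards. The function names are hypothetical, and the sketch assumes all cards are equally fast and run one WU at a time.

```python
def wu_limit_per_core(n_cpu_cores, per_core=6):
    """Work units allowed under the "6 WUs per CPU core" rule."""
    return per_core * n_cpu_cores


def wu_limit_per_gpu(n_gpus, per_gpu=100):
    """Work units allowed under a per-card rule like the one described for PrimeGrid."""
    return per_gpu * n_gpus


def buffer_minutes(n_wus, n_gpus, minutes_per_wu):
    """Minutes of work in the cache when n_gpus equal cards each run one WU at a time."""
    return n_wus / n_gpus * minutes_per_wu


# Assumption: 2.5 min per WU on a 48x0, inferred from 12 WUs lasting 15 min on two cards.
OLD_CARD_MIN_PER_WU = 2.5
NEW_CARD_MIN_PER_WU = OLD_CARD_MIN_PER_WU / 3  # "three times faster"

current = buffer_minutes(wu_limit_per_core(2), 2, OLD_CARD_MIN_PER_WU)
planned = buffer_minutes(wu_limit_per_core(2), 3, NEW_CARD_MIN_PER_WU)
per_gpu = buffer_minutes(wu_limit_per_gpu(3), 3, NEW_CARD_MIN_PER_WU)

print(f"dual core, 4890 + 4870:        {current:.1f} min")
print(f"dual core, 3x 6970:            {planned:.1f} min")
print(f"3x 6970 with a 100-per-GPU cap: {per_gpu:.1f} min")
```

Under these assumptions the per-core rule gives about 15 minutes today and a little over 3 minutes with three faster cards, while a 100-per-card cap would give well over an hour.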

|

Blurf Joined: 13 Mar 08 Posts: 804 Credit: 26,380,161 RAC: 0 |

CTAPbIi, please refer to Travis' Front Page post. Thank you. http://milkyway.cs.rpi.edu/milkyway/forum_thread.php?id=2179&nowrap=true#45739

|

|

Joined: 23 Nov 07 Posts: 33 Credit: 300,042,542 RAC: 0 |

"we are taking on a zero-tolerance policy for ppl who manipulate the limits. I guarantee we'll address them." First let me say that I've been around this project since the beginning and I don't post very much. This post has caused me to make an exception. Almost from the start there has been a WU limit. It has been explained over and over why there is a limit. It would seem to me that if you can afford to buy three 6970s, you should be able to afford to put them in decent machines. I have ATI cards running on single and dual core machines and don't have a problem with that. If you are so concerned with the size of your cache you can always move them to another project, but I doubt you will. The credit you get here is the reason. You and others like you will continue to complain about the WU limit because your cache will run out during the occasional outage. If you would set up a backup project you might not get as much credit, but you would keep your computers working. I just hope Travis and the others keep up all the good work they are doing. |

|

Joined: 4 Jan 10 Posts: 86 Credit: 51,753,924 RAC: 0 |

Bigred, I really appreciate your time and the exception you made for me :-) But let's talk about the limits. You must be kidding me :-) If I put three 6970s in one rig with a dual-core CPU, the buffer is about 3 minutes. That's it, man. And even if I get an 8-core CPU, the buffer is still literally nothing: 12 minutes only. You think that's enough? I don't think so. And on top of that, we all know how often the MW servers are down. This is what I'm talking about: give us at least some reliability. I'm not asking for too much, right? Then, sure, I can invest some money in a new platform, but that does not solve the problem. Remember, the buffer is only 12 min. Let's do some simple math: $350 for an i7-2600K (8 logical cores), $200-250 for a good mobo and $150 for RAM, which gives us approx. $700. And will I get more in terms of crunching for MW? The answer is NO. So why should I do that? In my understanding it makes way more sense to spend this money wisely, get two more cards and crunch more. Right? I do believe that this is the right way to go. You did not get me. All I'm talking about is being smart and making crunchers' lives easier. On the same dual-core CPU in PrimeGrid I'm getting 200 WUs (100 WUs per card), and there is no need to waste money on a platform upgrade. And thanks to these savings I can buy two more cards and crunch more. This is what I'm talking about: change that stupid rule and send WUs based on the number of video cards, not the number of CPU cores, exactly like PrimeGrid does. And in order to maintain proper work flow, reduce the deadline: just 1-2 days should be enough. Why can't MW do so? "If you would setup a backup project you might hot get as much credit but you would keep your computers working." Trust me, I know how to use BAM! and set up a backup project. "you can always move them to another project but I doubt if you will." If you look at the 1st line of my signature, you'll see that I already did that :-) All I want to do is help Travis and the project understand that there are better ways to move forward. If things continue like this, MW will continue to lose crunchers. Just check formula-BOINC.org to understand what I'm talking about. Apart from AMD's epic failure with the 69xx, I was fed up with babysitting my rigs, and that is why I moved to another project.

|

|

Joined: 21 Nov 09 Posts: 49 Credit: 20,942,758 RAC: 0 |

CTAP, The thing you seem to keep missing is that new WUs are generated from the ones returned. The server sends out 500 WUs, and as it gets them back it uses those that are the best fit to generate new WUs that should give an even better fit. Let's say you can cache 200 WUs here and they take about a minute and a half each to crunch. That's 5 hours that those WUs have missed out on getting better. Now I'm sure you're going to say something about CPUs having their WUs for more than 5 hours. Yes, it's true that they do. But why take the GPUs that are advancing things faster and then slow down the entire process by having people process out-of-date data? Who knows whether giving us larger caches would in fact speed up the science by letting us crunch through the server downtimes, or slow it down because most people would be crunching data that was no longer as relevant by the time they got to it. I'd imagine the latter is the case, or they would have given us a larger cache or done away with the limits completely.

|

|

Joined: 4 Jan 10 Posts: 86 Credit: 51,753,924 RAC: 0 |

Astromancer. OMG, you can read my mind :-) Correct me if I'm wrong, but if the deadline is EIGHT days, that means the project can wait 8 days for a WU to complete. Am I right? If so, where's the logic? How come the project can afford to lose 8 days in making WUs better??? If WUs should come back ASAP in order to influence the generation of new WUs, and that is crucial for the project, I'd do the following if I were in Travis' shoes: 1. Make the deadline literally 1 day. 2. Because of item 1, stop crunching on CPUs altogether, or make those WUs 10-15 minutes long. 3. Increase the cache to, let's say, 100 WUs in progress per video card. In a perfect world, the cache should depend on the card's GFLOPS as reported in BOINC manager. I understand that this might be brutal towards CPU crunchers, but the project should move ahead. So, just say "yes" or "no": do the items above make sense? I had a 6970 in my hands when it had just been released. At stock clocks it takes 70 secs per WU, and 55-56 secs @950MHz (the max OC at that time, due to CCC limitations). I do believe that a 6970 can easily hit 1000 MHz, and with voltage tweaking at least 1050 and even more. That means it can do 45 secs per WU. So crunching 100 WUs takes 4500-4700 secs (a cache of 1h15min-1h20min). Not that much, but way better than what we've got now, right? In this case we keep the project moving ahead way faster (1 day of delay rather than 8 days), and it's a huge improvement over what we've got now in terms of cache. Faster cards crunch more, and we depend way less on the slower CPUs that are really causing the project delay. What's the problem? Why haven't these pretty evident steps been implemented yet? Good questions, but I can't answer them...

|

banditwolf Joined: 12 Nov 07 Posts: 2425 Credit: 524,164 RAC: 0 |

Astromancer. I believe it went from 3 to 8 days so that Boinc wouldn't keep running them in High priority mode. Everyone was getting tired of MW always running and not letting other projects have a go. Doesn't expecting the unexpected make the unexpected the expected? If it makes sense, DON'T do it. |

|

Joined: 4 Jan 10 Posts: 86 Credit: 51,753,924 RAC: 0 |

banditwolf: "I believe it went from 3 to 8 days so that Boinc wouldn't keep running them in High priority mode. Everyone was getting tired of MW always running and not letting other projects have a go." In that case, why are we talking about "6 WUs per CPU core", caches, and "how important it is to return WUs ASAP" at all? And why is the project bothering to catch someone playing "games" instead of just increasing the cache? I still can't work out what's going on and where the logic (if any) behind that is...

|

|

Joined: 4 Oct 08 Posts: 1734 Credit: 64,228,409 RAC: 0 |

<sigh!> Go away, I was asleep

|

|

Joined: 10 Dec 09 Posts: 18 Credit: 9,456,111 RAC: 0 |

... I believe BOINC bases its "high priority" mode on each WU's estimated completion time, compared against the WU's deadline, to see which WU has a higher priority. In MilkyWay's case, estimates are based on the completion time of the most recently completed WU. Btw, relating to the original topic: if you examine blox's "computing army" you'll notice that as you go down the list, the times of last contact go back about 30 min with each host, meaning that every 30 min the computer resets its host ID in BOINC, which MilkyWay sees as a new host connected to the same user. They're all the same computer, 1 computer, just seen at different times doing the same thing and being logged as different computers. |
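The pattern described here (same specs, last-contact times stepping back by roughly 30 minutes per host) is something a script could look for. The sketch below is only an illustration: the `(host_id, spec, last_contact)` tuples, the function name, and the thresholds are all assumptions, not anything the project is known to use.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def suspected_id_resets(hosts, min_hosts=4, tolerance=timedelta(minutes=5)):
    """Find groups of same-spec hosts whose last-contact times are evenly spaced.

    hosts: iterable of (host_id, spec, last_contact) for ONE account, where
    spec is any hashable description of the hardware (hypothetical layout).
    Returns a list of (spec, mean_gap, host_ids) for each suspicious group.
    """
    by_spec = defaultdict(list)
    for host_id, spec, last_contact in hosts:
        by_spec[spec].append((last_contact, host_id))

    suspects = []
    for spec, entries in by_spec.items():
        if len(entries) < min_hosts:
            continue
        entries.sort()  # oldest contact first
        gaps = [later[0] - earlier[0]
                for earlier, later in zip(entries, entries[1:])]
        mean_gap = sum(gaps, timedelta()) / len(gaps)
        # Evenly spaced contacts suggest one machine re-registering on a timer.
        if all(abs(gap - mean_gap) <= tolerance for gap in gaps):
            suspects.append((spec, mean_gap, [hid for _, hid in entries]))
    return suspects
```

A real check would probably also want to confirm that the older "hosts" never contact the server again, since a room of identical machines started on a schedule could look similar.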

|

Joined: 4 Jan 10 Posts: 86 Credit: 51,753,924 RAC: 0 |

No comments on my posts? Looks like I'm right :-) |

banditwolf Joined: 12 Nov 07 Posts: 2425 Credit: 524,164 RAC: 0 |

No. You missed what was explained to you. Doesn't expecting the unexpected make the unexpected the expected? If it makes sense, DON'T do it. |

|

Joined: 4 Oct 08 Posts: 1734 Credit: 64,228,409 RAC: 0 |

By several posters in detail. <sigh> Go away, I was asleep

|

|

Joined: 10 Dec 09 Posts: 18 Credit: 9,456,111 RAC: 0 |

Then allow me to restate: our WUs in MilkyWay are special. Each WU is not independent; rather, the WUs are part of an evolving system. If we increase the cache, we won't get WUs back as quickly as they need to come back to complete the system and hopefully improve it as a whole (which is also why the WUs run as fast as they do). If you hoard 50 WUs, by the time the 50th WU gets done it might not make much of a difference anymore, as the system has moved on. Aka, you missed the boat. The reason we don't have quick deadlines to match the need for a quick turnaround is that the BOINC manager panics on seeing such a short deadline and tries to run all MilkyWay WUs as high priority, taking resources away from other projects running on the host. In other words, we need the WUs to come back completed as fast as possible. The longer a WU sits on a host, the less chance it will benefit the project. Btw, @ CTAPbIi: basing caches on the GPU is a very good idea (and would make sense, because an 8-core system running an ATI 3600 probably won't finish all 48 tasks as quickly as a system with 1 core and 4 ATI 6850s). However, I don't believe BOINC manager has the ability to see the GPU as a separate variable in terms of caching WUs just yet; correct me if I'm wrong. And caches need to be kept modest (not 100 WUs per GPU), as I stated above. |
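The "missed the boat" argument can be illustrated with a deliberately simplified toy model. This is not the project's actual search algorithm: it just assumes that each new WU is generated from the best result returned so far (here, by halving an error value), and that a result issued at one step only comes back `delay` steps later, which stands in for time spent waiting in a cache.

```python
from collections import deque


def best_after(steps, delay, start=1024.0):
    """Toy model: error remaining after `steps` rounds when every result
    takes `delay` rounds to come back (delay stands in for cache depth)."""
    best = start
    in_flight = deque()  # (round in which the result returns, resulting error)
    for step in range(steps):
        # Collect every result that has come back by now.
        while in_flight and in_flight[0][0] <= step:
            _, value = in_flight.popleft()
            best = min(best, value)
        # Issue a new WU built from the best result known at this moment.
        in_flight.append((step + delay, best / 2))
    return best


print(best_after(11, delay=1))  # 1.0   (results return immediately)
print(best_after(11, delay=5))  # 256.0 (results sit in a cache for 5 rounds)
```

In this toy, WUs issued while older results are still sitting in a cache are all built from the same stale starting point, so the search advances far less in the same number of rounds. How strongly that effect applies to the real project is something only the admins can say.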

©2024 Astroinformatics Group