Nbody 1.04


| Author | Message |

|---|---|

|

Send message Joined: 29 Dec 11 Posts: 26 Credit: 1,462,465,155 RAC: 33,627 |

It is bad on Windows too. Ties up the NVIDIA Card for too long. |

|

Send message Joined: 4 Sep 12 Posts: 219 Credit: 456,474 RAC: 0 |

"It is bad on Windows too. Ties up the NVIDIA Card for too long." I don't understand that remark. According to the applications page, there is only one app_version installed for Windows, and it doesn't have the (entirely inappropriate) opencl plan classes that can identify NBody as a GPU application under Linux. Certainly, there are some long tasks (WU 291391225 has reached 87% after 28 hours on my Windows machine), but the Windows NBody app uses CPU resources only. |

|

Send message Joined: 29 Nov 11 Posts: 18 Credit: 815,433 RAC: 0 |

Nbody computation error after 3 hrs; stderr report: <core_client_version>7.0.28</core_client_version> <![CDATA[ <message> - exit code -1073740940 (0xc0000374) </message> <stderr_txt> <search_application> milkyway_nbody 1.04 Windows x86_64 double OpenMP, Crlibm </search_application> Using OpenMP 1 max threads on a system with 4 processors Warning: not applying timestep correction for workunit with min version 0.80 Using OpenMP 1 max threads on a system with 4 processors Using OpenMP 1 max threads on a system with 4 processors <search_likelihood>-62200.827114903703000</search_likelihood> </stderr_txt> ]]> I have no GPU, so why is it being sent to me at all? |

|

Send message Joined: 20 Aug 12 Posts: 66 Credit: 406,916 RAC: 0 |

Please report the work unit numbers of some work units that error. We are currently looking at several issues. There will be an update very soon. Thanks to all for their help and feedback. We are working to have these issues resolved promptly. Jake |

|

Send message Joined: 4 Sep 12 Posts: 219 Credit: 456,474 RAC: 0 |

WU 290674321. That's the only WU which has errored for me this run (knock on wood), but all four replications failed - that seems significant. |

Ray Murray Send message Joined: 8 Oct 07 Posts: 24 Credit: 111,325 RAC: 0 |

Only WU 290721561 has errored for me, out of 47 completed. Two wingmen got the same -1073741571 (0xffffffffc00000fd) "Unknown error number", and one got -1073741515 (0xffffffffc0000135) "Unknown error number" with zero runtime. Two other WUs are so far inconclusive. |

|

Send message Joined: 20 Aug 12 Posts: 66 Credit: 406,916 RAC: 0 |

Thank you. Also, with regard to the insanely long WU times, I believe they may just be poorly estimated. I have one that says 52 hours to completion but did 1% in 5 minutes. At that rate it needs about 500 minutes, which is clearly not 52 hours. Let them run unless they have actually been running for 30+ hours or something ridiculous. I don't know why the estimates are wrong, though... Jake |
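The back-of-envelope check in the post above (1% done in 5 minutes) can be sketched in a few lines of Python. This is only an illustration, assuming progress is roughly linear in elapsed time; the function name `projected_total_minutes` is made up for this example and is not part of BOINC.

```python
def projected_total_minutes(elapsed_minutes: float, fraction_done: float) -> float:
    """Extrapolate total runtime, assuming progress is linear in time."""
    if not 0.0 < fraction_done <= 1.0:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed_minutes / fraction_done


# Figures from the post: 1% complete after 5 minutes.
total = projected_total_minutes(5.0, 0.01)
print(f"projected total: {total:.0f} minutes ({total / 60:.1f} hours)")
print("BOINC's displayed estimate was 52 hours")
```

If the projection and the displayed estimate differ by this much, the estimate is the more likely culprit, which is the point being made.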

Ray Murray Send message Joined: 8 Oct 07 Posts: 24 Credit: 111,325 RAC: 0 |

This one has gone 39 hrs with about 2 hrs left. Progress % is increasing (and it's checkpointing) and the estimate to finish is decreasing (although not in step with elapsed time), so it isn't stuck and I'm just letting it run to completion. It hasn't been sent to anyone else yet. It's a de_nbody_100K_104 rather than ps_..... |

|

Send message Joined: 4 Sep 12 Posts: 219 Credit: 456,474 RAC: 0 |

Thank you. WU 291391225 ran for 32 hours, completed and validated (after an initial estimate of 120 hours). I've been trying to work out what's going on with the estimation, but failing utterly. My host is an i7-3770K, clocked at 4.5 GHz. The application details page says that for NBody tasks, it has an APR of 1613.9 GFLOPS (1.6 TFLOPS!) - the APR for standard Milkyway tasks is a much more sedate 6.58 GFLOPS. The <app_version> section of my client_state.xml for NBody v104 contains <flops>44345657128.456566</flops>, or 44.3 GFLOPS. That figure, at least, seems to be unchanging, and coupled with the <rsc_fpops_est>19198700000000000.000000</rsc_fpops_est> value for my 32 hour task gives the original estimate of 120 hours. But the <rsc_fpops_est> values seem to be growing exponentially. I've just received a <rsc_fpops_est>12972200000000000.000000</rsc_fpops_est> (81 hours) estimate for WU 292161163, but the running speed (15% in 10 minutes - at least progress is linear) suggests it will finish in little over an hour. I fear you may be running into some of the boundary condition handling problems of http://boinc.berkeley.edu/trac/wiki/RuntimeEstimation. |
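The arithmetic behind the 120 hour and 81 hour figures in the post above can be reproduced with a short Python sketch. It assumes the initial estimate is simply `<rsc_fpops_est>` divided by `<flops>`, as the post describes, and ignores any other correction the client may apply; `estimated_hours` is a name invented for this example.

```python
def estimated_hours(rsc_fpops_est: float, flops: float) -> float:
    """Runtime estimate in hours: work size divided by assumed speed."""
    return rsc_fpops_est / flops / 3600.0


# <flops> value quoted from client_state.xml in the post.
FLOPS = 44345657128.456566

# <rsc_fpops_est> values quoted in the post.
tasks = {
    "WU 291391225": 19198700000000000.0,
    "WU 292161163": 12972200000000000.0,
}

for name, fpops in tasks.items():
    print(f"{name}: about {estimated_hours(fpops, FLOPS):.0f} hours estimated")

# Compare with the observed rate for WU 292161163: 15% in 10 minutes.
print(f"observed rate suggests about {10 / 0.15:.0f} minutes in total")
```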

Jeffery M. Thompson Send message Joined: 23 Sep 12 Posts: 159 Credit: 16,977,106 RAC: 0 |

OK, we have another binary to take care of some checkpointing issues that may be related to some of these errors. I am in the process of testing it. Specifically, it may address the computation errors and the long computation times. The GPU resources issue we will need to explore collectively a bit more; I do not have an answer for that at this time. I will continue to post details here until the new binary is released, and we will then start a separate thread. I am using this thread and the reported work unit data to make a list of errors and the systems involved. Jeff Thompson |

|

Send message Joined: 4 Sep 12 Posts: 219 Credit: 456,474 RAC: 0 |

Just recorded the first "exit code -1073740940 (0xc0000374)" for this host, this run - WU 292476933. The only thing different from all the others was that BOINC restarted while the task was active: "07/01/2013 14:37:20 | | Starting BOINC client version 7.0.42 for windows_x86_64". Maybe this (Windows) version of the app has problems re-initialising memory when restarting from a checkpoint? "The exception code 0xc0000374 indicates a heap corruption" |
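The negative exit codes quoted in this thread are Windows NTSTATUS values shown as signed 32-bit integers. A small Python sketch of the conversion follows; the lookup table only covers the three codes reported here, with the standard Windows names for them, and the helper names `to_ntstatus` and `describe` are made up for this example.

```python
# Standard Windows NTSTATUS names for the three codes seen in this thread.
KNOWN_STATUS = {
    0xC0000374: "STATUS_HEAP_CORRUPTION",
    0xC00000FD: "STATUS_STACK_OVERFLOW",
    0xC0000135: "STATUS_DLL_NOT_FOUND",
}


def to_ntstatus(exit_code: int) -> int:
    """Reinterpret a signed exit code as an unsigned 32-bit status value."""
    return exit_code & 0xFFFFFFFF


def describe(exit_code: int) -> str:
    """Format an exit code as hex plus its name, if it is in the table."""
    status = to_ntstatus(exit_code)
    return f"{exit_code} = 0x{status:08X} ({KNOWN_STATUS.get(status, 'unknown')})"


for code in (-1073740940, -1073741571, -1073741515):
    print(describe(code))
```

Masking with `0xFFFFFFFF` also strips the sign-extended `ffffffff` prefix that appears in forms such as `0xffffffffc00000fd`.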

|

Send message Joined: 7 Jun 08 Posts: 464 Credit: 56,639,936 RAC: 0 |

Well, the hosts I have which can run nBody have had a pretty easy time running them so far (only CPU ones at this point). My only comment is that since they are supposed to be MT apps, it's probably not a good idea to let more than one of them run at a time. Of course that could depend on a given host's local prefs, but I'm referring to a more or less 'default' settings host here. Previous versions that worked would take over the CPU for the duration of their run when they came up in the task queue, and more than one nBody task NEVER ran at the same time. This is not the case for 1.04. Al |

|

Send message Joined: 4 Sep 12 Posts: 219 Credit: 456,474 RAC: 0 |

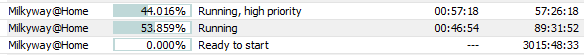

OK, may I claim the record for the highest runtime estimate so far? The third task below is for WU 290835322:  (it has <rsc_fpops_est>480966000000000000.000000</rsc_fpops_est>) And, while I'm at it, a n00b question prompted by a colleague: with no uploaded result data file, what science do you get at the end of all this work? Could I simply paste in <search_likelihood>-6438.250734594399500</search_likelihood> from my wingmate's result, and save myself 269,046.80 CPU seconds? Neither of us can find any other data returned by this app to the project. |

|

Send message Joined: 20 Aug 12 Posts: 66 Credit: 406,916 RAC: 0 |

The number you are reporting is a likelihood of fit. We give you a set of parameters, which n-body on your computer then uses to generate a model of a stellar stream. That model is then compared to the input model. The number reported is actually the EMD (earth mover's distance) of the comparison. In principle, we should be able to use n-body to take real data and fit orbital parameters to stellar streams. This is very useful for the science we are doing. I hope this answers your question. Jake |
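For readers unfamiliar with the earth mover's distance, here is a toy Python sketch for two one-dimensional histograms with equally spaced bins. It is not the project's code: the real comparison may use different dimensions, binning and normalisation, and the function name `emd_1d` is invented for this illustration. In one dimension the distance reduces to the summed absolute difference of the two cumulative distributions.

```python
from itertools import accumulate


def emd_1d(hist_a, hist_b):
    """Earth mover's distance between two 1-D histograms (unit bin spacing).

    Both histograms are normalised to total mass 1 before comparison.
    """
    if len(hist_a) != len(hist_b):
        raise ValueError("histograms must have the same number of bins")
    total_a, total_b = sum(hist_a), sum(hist_b)
    if total_a <= 0 or total_b <= 0:
        raise ValueError("histograms must have positive total mass")
    cdf_a = accumulate(x / total_a for x in hist_a)
    cdf_b = accumulate(x / total_b for x in hist_b)
    # Mass carried across each bin boundary equals the CDF difference there.
    return sum(abs(a - b) for a, b in zip(cdf_a, cdf_b))


model = [0, 2, 5, 2, 0]       # hypothetical simulated stream histogram
reference = [0, 0, 2, 5, 2]   # hypothetical input model histogram
print(emd_1d(model, model))      # identical histograms give zero distance
print(emd_1d(model, reference))  # shifted histogram gives a positive distance
```

A smaller distance means the simulated stream is a closer match to the input model, which is why a single number per task is enough for the parameter search.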

|

Send message Joined: 15 Oct 12 Posts: 3 Credit: 18,270,747 RAC: 0 |

For me, the same error twice ( - exit code -1073740940 (0xc0000374)), with WU 292055375 and WU 291398862. |

|

Send message Joined: 29 Dec 11 Posts: 26 Credit: 1,462,465,155 RAC: 33,627 |

My mistake, they are running on the CPUs only. Thanks for pointing it out. |

Overtonesinger Send message Joined: 15 Feb 10 Posts: 63 Credit: 1,836,010 RAC: 0 |

Yes, you can have this RECORD. Very nice, btw! :) I also have one of those strangely long WUs. My highest estimated time for an N-Body 1.04 task is only 558 hours. But as it has reached 1 percent after 12 hours of CPU time, I guess it will actually be done after 120 hours on the one logical core of a Core i7 720QM (1.73 GHz with 8 threads, up to 2.8 GHz TurboBoost with one thread). Sometimes I even let her run alone, to speed her up ;) And there is also a HIGH probability that it will error out in the end, like on the other computer! See it here: http://milkyway.cs.rpi.edu/milkyway/workunit.php?wuid=291600433 Is it normal? Because to me this work unit does not seem normal at all. :) Melwen - Child of the Fangorn Forest Rig "BRISINGR" [ASUS G73-JH, i7 720QM 1.73, 4x2GB DDR3 1333 CL7, ATi HD5870M 1GB GDDR5], bought on 2011-02-24 |

|

Send message Joined: 4 Sep 12 Posts: 219 Credit: 456,474 RAC: 0 |

Well, my record-breaker finished successfully in 150602 seconds (42 hours), but has been declared inconclusive despite agreeing with my wingmate to 19 significant digits. Now some other poor sucker (sorry, Shane!) gets to spend three days calculating -6438.250734594399500 all over again. My task finished without error, but I don't think that's a significant feature of the WU itself. Rather, I was careful not to restart BOINC during all that time: the task was pre-empted a couple of times, but kept in memory - so all it needed was a simple "resume" from the memory image, rather than a full "restart" from the checkpoint file. I'll test that theory of mine more fully in due course, but for the time being I've got WU 292870082 estimating 50 days still to run (and on target for a 60-hour run, after 45% progress). That'll delay me upgrading to BOINC v7.0.44 for a couple of days - see you all on Friday. BTW, we're beginning to get an idea where these estimates are coming from. I've posted before about the absurd APR (speed in Gigaflops) value my host is getting - today it's up to 1696.96. The server is supposed to tweak the task estimates by manipulating both <rsc_fpops_est> (workunit size) and <flops> (processor speed). It looks as if the size of the WU was calculated to suit a 1600 GFLOPS processor - it said half a zetta-fpop - but the transmitted <flops> was subjected to the John McEnroe sanity clause ("You cannot be serious!") and capped at (exactly - to 16 digits) ten times the CPU's Whetstone benchmark. Half a zetta-fpop at 44.3 gigaflops gives exactly the 3,015 hour estimate I screen-grabbed. |
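The mismatch described in the post above can be sketched in Python. This models the poster's inference, not the actual BOINC server code: the `min()` rule and the factor of ten are taken from the post, the Whetstone figure is simply the quoted `<flops>` divided by ten, and the names `capped_flops` and `estimate_hours` are invented for this example.

```python
def capped_flops(apr_flops: float, whetstone_flops: float,
                 cap_factor: float = 10.0) -> float:
    """Speed sent to the client: APR, capped at cap_factor x Whetstone.

    Assumption: this cap rule is the poster's reading of the behaviour.
    """
    return min(apr_flops, cap_factor * whetstone_flops)


def estimate_hours(rsc_fpops_est: float, flops: float) -> float:
    """Runtime estimate in hours for a given work size and speed."""
    return rsc_fpops_est / flops / 3600.0


APR = 1696.96e9                    # host APR quoted in the post, in FLOPS
WHETSTONE = 44345657128.456566 / 10  # assumed: quoted <flops> divided by ten
FPOPS = 480966000000000000.0       # <rsc_fpops_est> for WU 290835322

sent = capped_flops(APR, WHETSTONE)
print(f"work sized for APR:        {estimate_hours(FPOPS, APR):7.0f} hours")
print(f"shown with capped <flops>: {estimate_hours(FPOPS, sent):7.0f} hours")
```

With these inputs the capped figure comes out at roughly 3,000 hours, in line with the screenshot, while the uncapped APR would give an estimate of a few days.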

|

Send message Joined: 29 Dec 11 Posts: 26 Credit: 1,462,465,155 RAC: 33,627 |

I am curious: what are we accomplishing by running these units on our computers rather than on others, when they end in errors? Also, I'm wondering when the replacements that do not end in so many errors will be sent out. |

|

Send message Joined: 4 Sep 12 Posts: 219 Credit: 456,474 RAC: 0 |

"... what are we accomplishing ..." Testing, and helping the developers find and eradicate any remaining bugs. State: All (20) | In progress (1) | Pending (7) | Valid (10) | Invalid (1) | Error (1) for host 465695. Only one error here, which I reported. Try not to close down BOINC while a MW task is in progress, until they fix it. |

©2024 Astroinformatics Group