AMD FirePro S9150


| Author | Message |

|---|---|

|

Joined: 13 Oct 16 Posts: 112 Credit: 1,174,293,644 RAC: 0 |

So is the MilkyWay code fixed to include "Hawaii" based GPUs now? Or is this still a manual fix? Thanks for the info neo. That's above my skill level so I would need something I could just download and overwrite on my Windows machine. |

|

Joined: 2 Oct 16 Posts: 167 Credit: 1,013,272,730 RAC: 79,973 |

"@BeemerBiker: There is a thread somewhere about an Nvidia Titan V running MH. In that thread, the author mentions that each WU uses about 1.5GB of VRAM. So you can run about 6 WUs on an S9100 (12GB) or 8-10 WUs on an S9150 (16GB)." That's an NV card though, which ends up using much more VRAM than the same task on an AMD card. 4x tasks in Win10 on my 280x are only using ~250 MB of VRAM according to GPU-Z. Chalk it up to NV and OpenCL. The Titan V can't be fully utilized because of it, but the Vega 7 or S9100 should be able to, esp. with 16 and 12 GB of VRAM. |
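The VRAM arithmetic in the quoted estimate can be sketched as below. This is only a rough illustration: the 1.5GB per WU figure is the one quoted for the Nvidia card, and the 2 GB `reserve_gb` headroom is an assumption chosen for the example, not something stated in the thread. As noted above, AMD cards appear to use far less VRAM per task, so VRAM may not be the limiting factor there.

```python
import math


def max_concurrent_wus(vram_gb: float, per_wu_gb: float, reserve_gb: float = 2.0) -> int:
    """Estimate how many work units fit in VRAM at once.

    reserve_gb is a hypothetical headroom for the driver and display;
    it is an assumption for this sketch, not a measured value.
    """
    if per_wu_gb <= 0:
        raise ValueError("per_wu_gb must be positive")
    usable = max(vram_gb - reserve_gb, 0.0)
    return math.floor(usable / per_wu_gb)


if __name__ == "__main__":
    print("S9100 (12GB):", max_concurrent_wus(12, 1.5))
    print("S9150 (16GB):", max_concurrent_wus(16, 1.5))
```

With those assumed inputs the estimate lands at 6 for the 12GB card and 9 for the 16GB card, in line with the "about 6" and "8-10" figures quoted.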

|

Joined: 31 Dec 11 Posts: 17 Credit: 3,172,598,517 RAC: 0 |

My guess (from an actual experience I had years ago) is that those 5 tasks are not completely "unzipped" before the coprocessor starts on them, and the situation gets worse as more tasks are added. When I was running 20 concurrent (total of 100 on an S9100) I got a huge number of valid tasks, but the number of invalids was so high that the total throughput was worse than when 4 were running. However, I could have left it running like that, but it would have caused delays in validation for other users I was a wingman to. Looking at GPU usage while a single task is running, I don't think the tasks run in parallel; rather, they are crunched in series. Each of the bundled tasks spikes CPU usage at what appears to be the conclusion of the task. With multiple 7970s in the same system, I noticed a rather large drop in throughput (along with some crashes) when running more than three tasks concurrently, unless I gave each GPU adequate CPU resources. The CPU in the system at the time was an AMD R7 1700, so I was able to free up CPU resources to feed the GPUs. With 20 tasks running concurrently, the spike in CPU and IO overhead as each of the bundled tasks completes is going to be pretty high. This is especially true as the tasks sync up and start finishing/starting at the same time. It was that behavior which would choke my system and occasionally crash the driver. Ultimately, rather than babysit the system, I just ran two tasks per GPU and accepted the slightly worse overall throughput for the sake of system stability. |
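For anyone wanting to try the "two tasks per GPU with enough CPU for each" setup described above, BOINC reads an `app_config.xml` from the project directory, where `gpu_usage` is the fraction of a GPU each task claims (0.5 means two tasks per GPU) and `cpu_usage` is the CPU share budgeted per task. The sketch below just generates that file's contents. The app name `milkyway` and the one-core-per-task value are assumptions for illustration; check the application names your client reports before using it.

```python
import xml.etree.ElementTree as ET


def build_app_config(app_name: str, tasks_per_gpu: int, cpus_per_task: float) -> str:
    """Return app_config.xml text that runs tasks_per_gpu tasks on each GPU."""
    if tasks_per_gpu < 1:
        raise ValueError("tasks_per_gpu must be at least 1")
    root = ET.Element("app_config")
    app = ET.SubElement(root, "app")
    ET.SubElement(app, "name").text = app_name
    gpu_versions = ET.SubElement(app, "gpu_versions")
    # Each task claims 1/N of a GPU, so N tasks share one card.
    ET.SubElement(gpu_versions, "gpu_usage").text = f"{1 / tasks_per_gpu:.3g}"
    # CPU share reserved per GPU task, to keep the GPU fed.
    ET.SubElement(gpu_versions, "cpu_usage").text = f"{cpus_per_task:.3g}"
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # "milkyway" is an assumed app name; two tasks per GPU, one CPU core each.
    print(build_app_config("milkyway", tasks_per_gpu=2, cpus_per_task=1.0))
```

After saving the output as `app_config.xml` in the project folder, the client needs to re-read its config files (or be restarted) for the change to take effect.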

Joseph Stateson Joined: 18 Nov 08 Posts: 291 Credit: 2,463,985,753 RAC: 0 |

"@BeemerBiker: There is a thread somewhere about an Nvidia Titan V running MH. In that thread, the author mentions that each WU uses about 1.5GB of VRAM. So you can run about 6 WUs on an S9100 (12GB) or 8-10 WUs on an S9150 (16GB)." I was using MinGW and attempting to build under Windows. My Ubuntu systems are all minimal. I would have to upgrade one to a full desktop and add memory and a larger disk for Linux development. I was a longtime Windows VS platform programmer and retired when my company switched to Linux and CORBA. |

|

Joined: 13 Oct 16 Posts: 112 Credit: 1,174,293,644 RAC: 0 |

"@BeemerBiker: There is a thread somewhere about an Nvidia Titan V running MH. In that thread, the author mentions that each WU uses about 1.5GB of VRAM. So you can run about 6 WUs on an S9100 (12GB) or 8-10 WUs on an S9150 (16GB)." @BeemerBiker: If you get the Windows app compiled, please share it if you don't mind :) |

|

Joined: 28 Mar 18 Posts: 14 Credit: 761,475,797 RAC: 0 |

"@BeemerBiker: There is a thread somewhere about an Nvidia Titan V running MH. In that thread, the author mentions that each WU uses about 1.5GB of VRAM. So you can run about 6 WUs on an S9100 (12GB) or 8-10 WUs on an S9150 (16GB)." Confirmed, my Radeon 7 used about 1.1GB of VRAM running 7 tasks. Looks like the Radeon is having the same issue. `<number_WUs> 5 </number_WUs>` |

|

Joined: 13 Oct 16 Posts: 112 Credit: 1,174,293,644 RAC: 0 |

Would be nice if @Jake Weiss fixed it in the client code and pushed it out already. He asked someone to put a pull request in and neofob was kind enough to do it and do the fix. So next step is Jake's. |

|

Joined: 4 Mar 18 Posts: 23 Credit: 268,380,547 RAC: 0 |

"Would be nice if @Jake Weiss fixed it in the client code and pushed it out already. He asked someone to put a pull request in and neofob was kind enough to do it and do the fix. So next step is Jake's." Actually, Jake merged my PR. The binary has not been pushed out to the public for some reason. The current one, 1.46, was built way back in March 2017. |

Joseph Stateson Joined: 18 Nov 08 Posts: 291 Credit: 2,463,985,753 RAC: 0 |

This is an old thread but I would like to mention that I am no longer getting invalid work like I used to. Something has changed for the better. Running 5 concurrent work units on any S9x00 GPU used to generate 1 invalid for every 5-6 valid ones, but they are all valid now (keeping my fingers crossed, of course). Measured 730 watts used by this 4-GPU Z400 system. Above 5 concurrent tasks per GPU things slow down. |

|

Joined: 12 Dec 15 Posts: 53 Credit: 133,288,534 RAC: 0 |

"This is an old thread but I would like to mention that I am no longer getting invalid work like I used to. Something has changed for the better. Running 5 concurrent work units on any S9x00 GPU used to generate 1 invalid for every 5-6 valid ones, but they are all valid now (keeping my fingers crossed, of course). Measured 730 watts used by this 4-GPU Z400 system. Above 5 concurrent tasks per GPU things slow down." That's odd that invalids would be such an issue at 5 and above for that card. My RX 550 can run 8 concurrent on its 4GB of RAM with 5.5% invalids and only a 2% loss in credit/sec compared with 4 WU at once. The step from 7 to 8 WU only raised invalids from 5.3% to 5.5%. Though, its performance is only 0.533 credit/sec at 8 WU and 0.544 c/s at 4 WU. Tests above 8 WU were stopped by limited system RAM, so I purchased 16GB and a Sapphire Tri-X 280x to perform the same WU group test runs on. Here's the list of my results from a spreadsheet with 90-120 results at each WU level. |
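The "2% loss" figure follows directly from the two credit/sec numbers in the post. A minimal sketch of that arithmetic, with the function name chosen here purely for illustration:

```python
def relative_loss(baseline: float, candidate: float) -> float:
    """Fractional throughput loss of candidate relative to baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - candidate) / baseline


if __name__ == "__main__":
    # 0.544 credit/sec at 4 WU versus 0.533 credit/sec at 8 WU (figures from the post).
    loss = relative_loss(0.544, 0.533)
    print(f"Loss going from 4 WU to 8 WU: {loss:.1%}")
```

The same helper can be reused for any pair of rows in a results spreadsheet to see where adding more concurrent WUs stops paying off.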

|

Joined: 10 Mar 13 Posts: 9 Credit: 523,622,956 RAC: 0 |

Also interesting. I was just checking the forums as I've recently noticed I'm now getting a lot of invalids. I'm only running 3 WU per card and haven't changed anything recently. |

Joseph Stateson Joined: 18 Nov 08 Posts: 291 Credit: 2,463,985,753 RAC: 0 |

"Also interesting. I was just checking the forums as I've recently noticed I'm now getting a lot of invalids. I'm only running 3 WU per card and haven't changed anything recently." State: All (11325) · In progress (162) · Validation pending (0) · Validation inconclusive (1060) · Valid (10094) · Invalid (0) · Error (9) Application: All (11325) · Milkyway@home N-Body Simulation (0) · Milkyway@home Separation (11325) Your computers are hidden so I'm just guessing: do you have the same drivers as I do, as shown in my event file image below? [edit] Additional info on my drivers was added. If the image is not working, add www to the URL. |

|

Joined: 10 Mar 13 Posts: 9 Credit: 523,622,956 RAC: 0 |

Yes, except I didn't install Mantle or audio. |

|

Joined: 10 Mar 13 Posts: 9 Credit: 523,622,956 RAC: 0 |

Switched to one WU per GPU, no invalids. Also tried newer drivers (with clean install, using DDU) but PC would crash. May try an even older driver |

|

Joined: 10 Mar 13 Posts: 9 Credit: 523,622,956 RAC: 0 |

Downgraded the driver to 18.Q3, but still getting invalids when running multiple WU per GPU. |

Joseph Stateson Joined: 18 Nov 08 Posts: 291 Credit: 2,463,985,753 RAC: 0 |

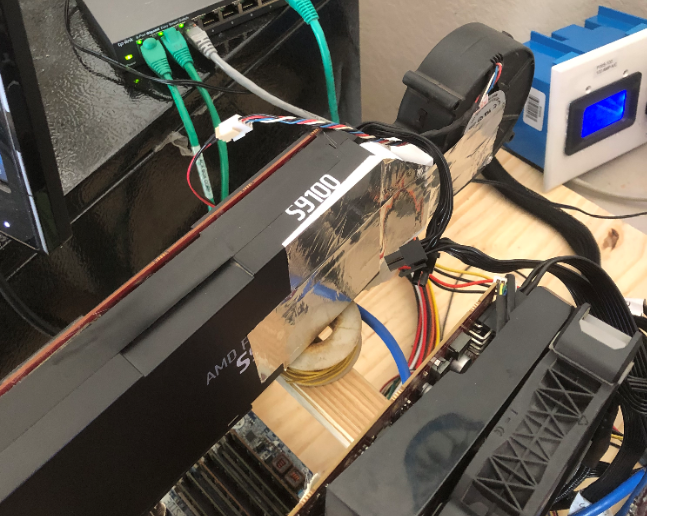

Not sure what is going on, sorry. Guessing: did you enable ECC on the board? My 9100 has a high power blower and it barely cools the board. I had to tape it on as shown cuz it fell off once and the system shut down in seconds. Your 9150 runs a lot hotter I suspect. One of the fans on that adjacent S9000 quit spinning and I temporarily put a 120mm butted up against it.  |

|

Joined: 12 Dec 15 Posts: 53 Credit: 133,288,534 RAC: 0 |

I was thinking of getting one of these for the co-processor PCIe slot in my IBM M-series server this coming winter. The CPU fans are meant to blow air through the board... but in spring and early fall, the CPUs are downclocked to minimum and the fans stay around 1000 RPM. Not sure it would be properly cooled unless it was also downclocked or set to a cooler-running project like Amicable Numbers (which will be done with ^20 by fall; unsure of its future). Have you been able to get MSI Afterburner (or a Linux equivalent app) to properly downclock, downvolt or power-limit your S9150? |

|

Joined: 12 Dec 15 Posts: 53 Credit: 133,288,534 RAC: 0 |

"downgraded driver to 18.Q3, but still getting invalids when running multiple WU per GPU" The newest drivers (19.4.3, 19.3.1) ended up giving no invalids at 4+ WU per GPU on the 280x. 18.7.1 had a small speed increase but also had 5% invalids above 4 WU. BTW, it's the last driver that works properly on Enigma GPU WUs. Had to drop to 17.11.4 to get Amicable Numbers to work most efficiently. 17.11.4 was also the only driver of about 9 I tried that gave MSI Afterburner actual control over the power slider on my RX 550. 18.5.2 also worked efficiently on Amicable Numbers, for both the 280x and RX 550, but with no power sliders. Sorry, haven't gotten around to testing 17.11.4 on Milkyway because Amicable is nearing the end of their ^20 dataset and I'm hunting a WUProps badge and some Mag. |

|

Joined: 10 Mar 13 Posts: 9 Credit: 523,622,956 RAC: 0 |

Actually, I've got a 9100. What temperature is yours running? Mine runs about 180F-195F. ECC is enabled and indicates no errors. |

Joseph Stateson Joined: 18 Nov 08 Posts: 291 Credit: 2,463,985,753 RAC: 0 |

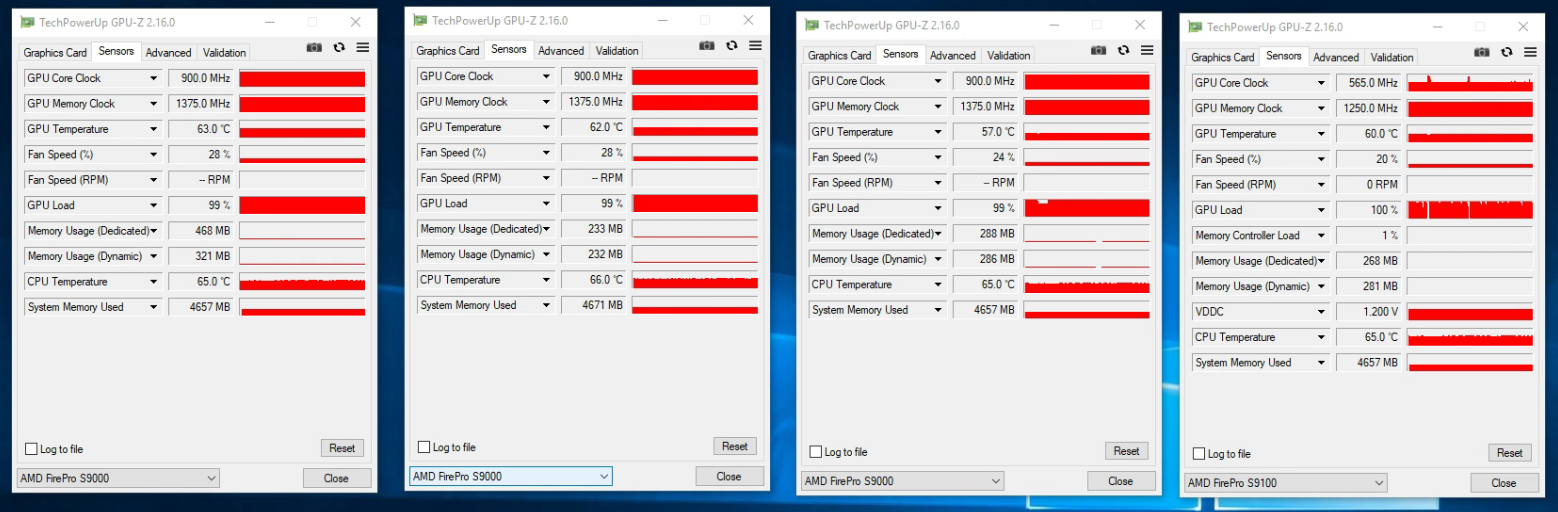

I have to stop BOINC before bringing up GPU-Z or the system hangs. There is nothing obviously wrong in the event logs; not sure what causes this. My S9x00 temps are all much lower than 180-195F. Your temps seem high compared to mine, which sit around 65C (149F) for three S9000s (same core as the HD7950) and one S9100. I found some max temps here but our FirePros are not listed. The W9100 reviewed by Tom's Hardware shows 92-93C, which is roughly what you see, so I guess it is OK: https://www.tomshardware.com/reviews/firepro-w9100-performance,3810-16.html Does the clock on your S9100 vary like mine? I show 550-650 MHz and rarely hit the design clock of 825, as you can see in the graph. |
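Since the posts mix Fahrenheit and Celsius readings, a small conversion helper makes the comparison easier. This is only a convenience sketch using the standard formulas; the temperatures are the ones quoted in the thread.

```python
def f_to_c(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32) * 5 / 9


def c_to_f(temp_c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9 / 5 + 32


if __name__ == "__main__":
    # Reported S9100 range of 180-195F, expressed in Celsius.
    print(f"180F = {f_to_c(180):.1f}C, 195F = {f_to_c(195):.1f}C")
    # Review figures of 92-93C, expressed in Fahrenheit.
    print(f"92C = {c_to_f(92):.1f}F, 93C = {c_to_f(93):.1f}F")
    # The 65C reading, for comparison.
    print(f"65C = {c_to_f(65):.1f}F")
```

Converted this way, the 180-195F readings fall a little below the 92-93C review figures rather than exactly on them.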
