My number of "invalids" decreased. How is this possible?

| Author | Message |

|---|---|

Joseph Stateson Joined: 18 Nov 08 Posts: 291 Credit: 2,461,693,501 RAC: 0 |

I am looking at a problem with one of my systems that is reporting invalid tasks. The more concurrent tasks I run (for example, 20 at a time), the higher the percentage of invalid tasks. When running one task at a time, it seems I get no invalid work units at all. I have been picking and choosing drivers and varying the % CPU assigned to get the best performance on my S9100, which seems to have several drivers available. Just a few hours ago the number of invalid units, as reported by your database, dropped from over 140 down to 42 on the system I am debugging. An invalid result is one that is different from the "wingman" and the task must be sent to a 3rd system to determine which answer is correct. Since I had failed the validity check on at least 140 of my units, how is it that I no longer have that many invalids? I double-checked one of my failing work units but it is no longer available. I understand raw data and results are deleted to save disk storage, but I would think the number of units processed would always be available. |

mikey Joined: 8 May 09 Posts: 3339 Credit: 524,010,781 RAC: 0 |

"I am looking at a problem with one of my systems that is reporting invalid tasks. The more concurrent tasks I run (for example, 20 at a time), the higher the percentage of invalid tasks. When running one task at a time, it seems I get no invalid work units at all. I have been picking and choosing drivers and varying the % CPU assigned to get the best performance on my S9100, which seems to have several drivers available." No, the numbers are not cumulative. I have been crunching for MW with my GPUs since 2009 and my stats are: State: All (1892) · In progress (177) · Validation pending (0) · Validation inconclusive (135) · Valid (1580) · Invalid (0) · Error (0). There is no way that's all the workunits I have ever done, with ZERO invalids or errors! You can see my credits and RAC under my name. I have had TONS of workunits that came back as invalid or with errors that are no longer showing up. |

|

Joined: 25 Feb 13 Posts: 580 Credit: 94,200,158 RAC: 0 |

Hi BeemerBiker, It looks like the likelihood calculation is actually not being completed correctly for some small percentage of your work. Is it possible that you are running more runs than your card has memory for? Since we've moved to bundling workunits the size of a single run has increased to 500 MB on the card. Normally if you run out of memory OpenCL is supposed to throw an error, but in my experience, OpenCL sometimes fails to do what you expect it to. Jake |
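
As a rough sanity check on the memory question above, the following sketch estimates how many bundled runs fit on a card if each one needs about 500 MB, the figure quoted in the post. The function names and the example card sizes are illustrative assumptions, and the estimate ignores driver overhead and any OpenCL allocation limits.

```python
RUN_SIZE_MB = 500  # per-run footprint quoted in the post above


def max_runs_in_memory(card_memory_mb: int, run_size_mb: int = RUN_SIZE_MB) -> int:
    """Return how many runs fit if each one needs run_size_mb of card memory."""
    if card_memory_mb < 0 or run_size_mb <= 0:
        raise ValueError("memory sizes must be positive")
    return card_memory_mb // run_size_mb


def oversubscribed(concurrent_tasks: int, card_memory_mb: int) -> bool:
    """True when the requested concurrency needs more memory than the card has."""
    return concurrent_tasks > max_runs_in_memory(card_memory_mb)


if __name__ == "__main__":
    # Hypothetical card sizes in MB, chosen only for illustration.
    for memory_mb in (3072, 4096, 12288):
        print(f"{memory_mb} MB card: at most {max_runs_in_memory(memory_mb)} runs")
    print("20 tasks on a 4096 MB card oversubscribed:", oversubscribed(20, 4096))
```

If the requested concurrency exceeds this bound, running out of card memory is a plausible (though unconfirmed) cause of bad results.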

Joseph Stateson Joined: 18 Nov 08 Posts: 291 Credit: 2,461,693,501 RAC: 0 |

I did not know that you were bundling runs. Is that what the "<number_Wus> 5 </number_Wus>"

|

Joseph Stateson Joined: 18 Nov 08 Posts: 291 Credit: 2,461,693,501 RAC: 0 |

Hi BeemerBiker, I got to digging deeper into this, as there should be no reason why I am getting invalids on my S9100 as a function of the number of concurrent tasks. Another user, melk, also discovered the same behavior. I went to my list of invalids and found some strange statistics, one of which I want to share.

First, these co-processors are 3x as fast at double precision as a typical HD7950 at the same clock speed (~850 MHz): FP64 is 2620 compared to 717. They also have 12 GB or more of memory compared to 3 GB. Granted, OpenCL probably cannot access over 4 GB and possibly uses way less than that. However, the behavior I see is that as I add concurrent tasks, the S91xx devices start generating invalids exponentially up through about 20 concurrent WUs (all I tried), while the HD7950s generate a boatload of errors starting after 5 concurrent units, sometimes so fast that I go through all 80 I am allowed before I can suspend the project.

I have a program here that I use to compute performance information when setting up a project to use concurrent tasks. What I observed I can summarize as follows:

HD7950: no invalids and can run 4-5 concurrent, but it looks like after 3 concurrent there is no additional benefit in throughput, and after 5 all h-ll breaks loose.

S9100, 1 work unit: generates no invalids that I can see.

S9100, 2 work units: may generate an invalid. Not sure, as I don't have time to watch it and your policy (like all projects) drops the invalid count as data is removed from the server to make room.

S9100, 3 concurrent work units: probably generate invalids, but if I start with, say, 320, it may take 4-5 days before that number drops to, for example, 100.

S9100, 4 concurrent work units: generate enough invalids to consistently stay at 320, as I noticed the count hovered at 320 without changing much over a week's time. I finally dropped ngpu to 3 as I didn't want other users to have to reprocess my invalids.

This gets much worse... I ran 20 WUs for a short time and about one out of four were invalid. This system is extremely fast, producing a result in 35 seconds, and my run at 20 concurrent tasks for 30 minutes produced 800 invalid work units. I was not aware of this until about an hour after I started my performance test, when I got around to looking at the results. The fact that 3 out of 4 were computed correctly indicates there is a software problem in how work units are added to or removed from the GPU. This could be an OpenCL problem rather than a Milkyway one, as the driver is different. For example, looking at other users' S91xx (total of 3 users here) I also see the following warning in the "Task Details" of all results, both valid and invalid:

C:\Users\josep\AppData\Local\Temp\\OCL2292T3.cl:183:72: warning: unknown attribute 'max_constant_size' ignored

__constant real* _ap_consts __attribute__((max_constant_size(18 * sizeof(real)))),

^

C:\Users\josep\AppData\Local\Temp\\OCL2292T3.cl:185:62: warning: unknown attribute 'max_constant_size' ignored

__constant SC* sc __attribute__((max_constant_size(NSTREAM * sizeof(SC)))),

^

C:\Users\josep\AppData\Local\Temp\\OCL2292T3.cl:186:67: warning: unknown attribute 'max_constant_size' ignored

__constant real* sg_dx __attribute__((max_constant_size(256 * sizeof(real)))),

^

3 warnings generated.

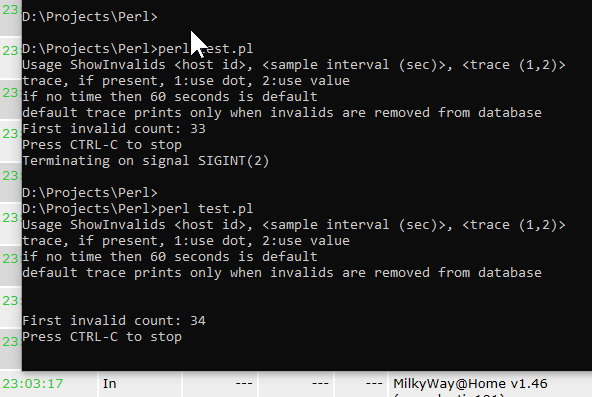

I do not see the above warning on any of my HD7950 systems, and the drivers are different. I noticed some other problems but will post them in a separate thread. Question: Are there any command-line parameters or config settings that can enable any type of debugging information? I would like to debug this problem and see if there is a fix. I am guessing that the tasks are possibly being inserted into and removed from the GPU too fast and (just a guess) a result is not being fully "saved" before its task is removed from the GPU. |
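
One way to compare the stderr of valid and invalid tasks is to count the compiler diagnostics in each. The sketch below is only an illustration, not a Milkyway or BOINC tool: it assumes you have copied the "Task Details" stderr text into a string yourself, and the names `WARNING_RE` and `count_warnings` are made up for this example.

```python
import re
from collections import Counter

# Matches clang-style diagnostics of the form "path:line:col: warning: message".
# The greedy file group lets Windows paths containing "C:" match correctly.
WARNING_RE = re.compile(
    r"^(?P<file>.+):(?P<line>\d+):(?P<col>\d+): warning: (?P<msg>.+)$"
)


def count_warnings(stderr_text: str) -> Counter:
    """Count how often each distinct warning message appears in stderr_text."""
    counts = Counter()
    for raw_line in stderr_text.splitlines():
        match = WARNING_RE.match(raw_line.strip())
        if match:
            counts[match.group("msg")] += 1
    return counts


# Sample taken from the warnings quoted in the post above.
SAMPLE = r"""C:\Users\josep\AppData\Local\Temp\\OCL2292T3.cl:183:72: warning: unknown attribute 'max_constant_size' ignored
C:\Users\josep\AppData\Local\Temp\\OCL2292T3.cl:185:62: warning: unknown attribute 'max_constant_size' ignored
C:\Users\josep\AppData\Local\Temp\\OCL2292T3.cl:186:67: warning: unknown attribute 'max_constant_size' ignored
3 warnings generated."""

if __name__ == "__main__":
    for message, count in count_warnings(SAMPLE).items():
        print(f"{count} x {message}")
```

If valid and invalid tasks show identical counts, as the post reports, the warning by itself is unlikely to explain the invalids.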

|

Joined: 12 Dec 15 Posts: 53 Credit: 133,288,534 RAC: 0 |

Was there a final conclusion on the number of invalids from running concurrent tasks on BeemerBiker's S9100 card? My RX 550 with 4GB RAM should be able to run 8 WU at once (Jake Weiss: "Since we've moved to bundling workunits the size of a single run has increased to 500 MB on the card.") but about 30-50% were invalid. GPU-Z reports that video RAM used stayed under 2600MB of 4096MB available. At 6 and now at 4 WU at once it's still throwing a high rate of invalids, but it will take 24 hours to get the results. When looking at the WU results, it doesn't appear that they differ from those of the other two wing WUs. EDIT: vseven's message shows that their nVidia was using 1800MB per WU. Is this an underreporting of RAM used by GPU-Z, or do ATI OpenCL WUs use that much less RAM? |

Keith Myers Joined: 24 Jan 11 Posts: 715 Credit: 555,620,919 RAC: 41,897 |

"Is that correct? If so, since BOINC reports just under 4gb of memory on my systems, then only 1gb is actually available?" Forget what BOINC reports for available GPU memory. BOINC is only coded to report as a 32-bit app, so 4GB is the max reported. OpenCL, on the other hand, DOES see all of a GPU's global memory. It is still limited, though, to accessing 26% of the global memory. So for an 8GB global memory card, it has access to a little over 2GB of memory to perform its calculations.

|

|

Joined: 12 Dec 15 Posts: 53 Credit: 133,288,534 RAC: 0 |

"Is that correct? If so, since BOINC reports just under 4gb of memory on my systems, then only 1gb is actually available?" Thanks for the response. I misreported GPU-Z above; it saw under 1024MB in use. So on this RX 550 GPU with 4096MB, OpenCL 1.2 is only going to have access to 1024MB, and since Weiss says each WU needs 500MB, this RX 550 should be able to run 2 WU successfully. That's what it is attempting tonight. BeemerBiker's S9100 with 12,000MB should, hypothetically, run 6 WU at once, as OpenCL will see 3100MB and each needs 500MB, but he reports errors after the 4th WU. Something still seems amiss. I still have questions: 1) Why did the RX 550 successfully run over half of the WU that it ran 8 at a time, 6 at a time and 4 at a time? 2) What is swapping the WUs' access to the 1024MB that OpenCL sees? Is it the GPU's internal memory management code, the Catalyst driver code or OpenCL code? 3) Even if the WUs are sharing less than optimal RAM, they should still complete with valid results, albeit much more slowly than 1 WU at a time. What is causing the invalid results? As I said earlier, the results appear to match the wing WUs' but are still marked invalid. |
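
The arithmetic in this exchange can be sketched as below. The 26% accessible fraction is the figure quoted by Keith Myers in this thread and the 500 MB per WU is Jake's figure; both are treated here as assumptions rather than values read from any OpenCL device query, and the function names are illustrative.

```python
import math

ACCESSIBLE_FRACTION = 0.26  # fraction quoted in this thread; an assumption, not a spec value
RUN_SIZE_MB = 500           # per-WU footprint quoted earlier in the thread


def opencl_visible_mb(global_memory_mb: float,
                      fraction: float = ACCESSIBLE_FRACTION) -> float:
    """Estimate the memory OpenCL can use, given the card's global memory."""
    return global_memory_mb * fraction


def max_concurrent_wu(global_memory_mb: float,
                      run_size_mb: float = RUN_SIZE_MB) -> int:
    """Estimate how many WUs fit in the OpenCL-visible share of memory."""
    return math.floor(opencl_visible_mb(global_memory_mb) / run_size_mb)


if __name__ == "__main__":
    for name, memory_mb in (("RX 550", 4096), ("S9100", 12000)):
        visible = opencl_visible_mb(memory_mb)
        print(f"{name}: ~{visible:.0f} MB visible, {max_concurrent_wu(memory_mb)} WU")
```

The post rounds the visible memory to 1024MB and 3100MB; the resulting WU counts (2 and 6) come out the same under either rounding.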

Keith Myers Joined: 24 Jan 11 Posts: 715 Credit: 555,620,919 RAC: 41,897 |

"I still have questions:" Don't know. These are questions for the science app developers and the graphics driver developers. How any specific card responds to requests to use the card's global memory has too many variables and permutations in chip architecture, driver design, API design and science app design.

|

|

Joined: 12 Dec 15 Posts: 53 Credit: 133,288,534 RAC: 0 |

My test machine is currently running 6 OpenCL WU at a time on the RX 550 with 4GB VRAM. Not one invalid. GPU-Z reports 322MB dedicated and 339MB dynamic RAM used on the GPU, so that's about 54MB dedicated and 57MB dynamic per WU. If 1024MB is the limit on OpenCL then I expect to have failures at 10 WU at a time, although my system RAM might run out first. I was using Adrenalin 18.7.1 (Windows store 24.20.12019.1010) because it was the latest driver that Enigma@home could use. Versions after 18.7.1 have a set of libraries updated by AMD that Enigma isn't compatible with (see discussion). Also, there is the issue with the latest Adrenalin and ForceWare drivers shutting down the competing manufacturer's GPU OpenCL abilities. My RX 550 is from a Dell workstation so I grabbed their latest driver, and it is an odd one, but it fixed the problem of invalid Milkyway WUs when running more than 1 at a time. It has a driver version of 25.20.14003.2000 (Windows 10, October 2018) but GPU-Z reports it as Adrenalin 17.12 (December 2017). The 1060's OpenCL abilities were shut down by that driver, so it's back to trying to figure out how to update the Windows registry so that both cards have OpenCL, or I'll be stuck with only CUDA projects on the nVidia card. TL;DR: BeemerBiker's invalids were likely from the Adrenalin driver version they had installed. The latest Dell driver for Windows 10 for my RX 550 fixed the issue and it's running 6 WU at once (and likely will do 9) without any invalid WUs. There are performance increases at every even WU bump, but only ~5%. (0.48 credit/sec = 1 WU, 0.51 c/s = 2/3 WU, 0.54 c/s = 4/5 WU) |
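
The per-WU memory estimate in this post can be reproduced with the sketch below. It assumes, as the post's "failures at 10 WU" estimate seems to, that both the dedicated and the dynamic usage count against a 1024MB limit; that reading and the function names are assumptions made for illustration.

```python
def per_wu_usage_mb(total_mb: float, concurrent_wu: int) -> float:
    """Average memory used per WU when concurrent_wu tasks share total_mb."""
    if concurrent_wu <= 0:
        raise ValueError("concurrent_wu must be positive")
    return total_mb / concurrent_wu


def first_failing_count(limit_mb: float, per_wu_mb: float) -> int:
    """Smallest number of concurrent WUs whose combined usage exceeds limit_mb."""
    return int(limit_mb // per_wu_mb) + 1


if __name__ == "__main__":
    dedicated = per_wu_usage_mb(322, 6)  # GPU-Z dedicated figure from the post
    dynamic = per_wu_usage_mb(339, 6)    # GPU-Z dynamic figure from the post
    combined = dedicated + dynamic
    print(f"dedicated per WU: {dedicated:.1f} MB")
    print(f"dynamic per WU:   {dynamic:.1f} MB")
    print(f"first count over 1024 MB: {first_failing_count(1024, combined)}")
```

Under these assumptions 9 WUs fit and the 10th would exceed the limit, which matches the "likely will do 9" estimate in the post.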

|

Joined: 13 Oct 16 Posts: 112 Credit: 1,174,293,644 RAC: 0 |

I'm still trying to figure out why you're running 6 WUs at once on a RX 550. Doesn't sound optimal to me for points. |

|

Joined: 12 Dec 15 Posts: 53 Credit: 133,288,534 RAC: 0 |

"I'm still trying to figure out why you're running 6 WUs at once on a RX 550. Doesn't sound optimal to me for points." Well, I wanted to have the dataset ready before answering this. Here are the results for the RX 550 (the number in parentheses is credit/sec if no invalids were occurring):

| WU at once | credit/sec | CPU/WU | minutes | invalids | hours per day at WUProps |
|---|---|---|---|---|---|
| 1 | 0.481 | 3.0% | 7.70 | 0.0% | 24 |
| 2 | 0.505 | 2.3% | 14.99 | 0.0% | 48 |
| 3 | 0.510 | 1.8% | 22.28 | 0.0% | 72 |
| 4 | 0.540 | 1.4% | 33.87 | 0.0% | 96 |
| 5 | 0.532 (0.544) | 1.0% | 44.59 | 2.4% | 120 |
| 6 | 0.525 (0.544) | 0.80% | 52.46 | 3.3% | 144 |
| 7 | 0.520 (0.549) | 0.68% | 60.81 | 5.3% | 168 |
| 8 | 0.534 (0.566) | 0.63% | 63.19 | 5.5% | 192 |

So 4 WU peaks the credit/second, but 8 WU has distinct advantages that make it better than 4 WU if you care about your CPU projects (my GRC Mag for CPU projects is far better than for the GPU projects) and if you are working on your WUProps badges. 8 WU at once is only 0.534/0.544, about 1.8% less credit per second, but it doubles the WUProps badge gains and cuts CPU usage per WU in half. If the invalid issue could be eliminated, at 0.566/sec, 8 WU would be the absolute best option. (I bought a 280x Sapphire Tri-X and will retest with that card.) |
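
The comparison in this post can be recomputed from the measured figures. The sketch below assumes the parenthesised "no invalids" rates come from dividing the measured credit/sec by the fraction of valid results; that relation is a reading of the numbers, not something the post states, and the names are illustrative.

```python
# Concurrent WU count -> (measured credit/sec, invalid fraction), from the post.
RESULTS = {
    1: (0.481, 0.000),
    2: (0.505, 0.000),
    3: (0.510, 0.000),
    4: (0.540, 0.000),
    5: (0.532, 0.024),
    6: (0.525, 0.033),
    7: (0.520, 0.053),
    8: (0.534, 0.055),
}


def rate_without_invalids(measured: float, invalid_fraction: float) -> float:
    """Credit/sec that would be earned if no results were marked invalid."""
    return measured / (1.0 - invalid_fraction)


def relative_loss(rate: float, baseline: float) -> float:
    """Fraction by which rate falls short of baseline."""
    return 1.0 - rate / baseline


if __name__ == "__main__":
    for wu, (measured, invalid) in RESULTS.items():
        ideal = rate_without_invalids(measured, invalid)
        print(f"{wu} WU: measured {measured:.3f}, without invalids {ideal:.3f}")
    best = max(RESULTS, key=lambda wu: RESULTS[wu][0])
    print("highest measured credit/sec at", best, "WU")
    print(f"8 WU vs 0.544: {relative_loss(0.534, 0.544):.1%} less")
```

The recomputed "without invalids" rates land within about 0.001 of the parenthesised values in the post, which is consistent with rounding in the measured inputs.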

©2024 Astroinformatics Group