Validation inconclusive

| Author | Message |

|---|---|

HRFMguy Joined: 12 Nov 21 Posts: 236 Credit: 575,038,236 RAC: 0 |

Quote: "The simplest method to get the beginning of any task category is to use the offset value in the URL." Wow. I sure as hell missed that! Your post is a good candidate for the MW user guide! Thanks! |

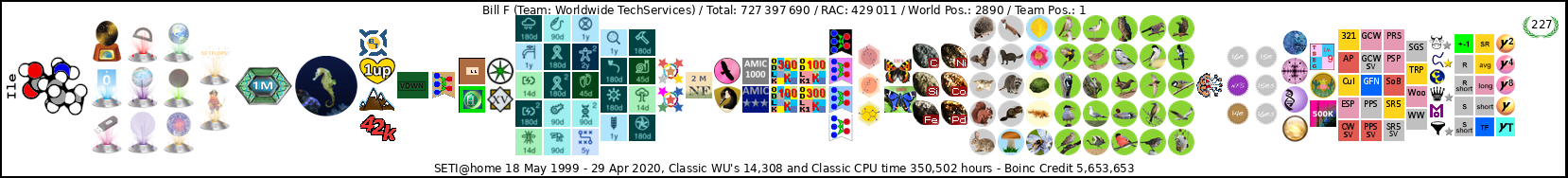

Bill F Joined: 4 Jul 09 Posts: 108 Credit: 18,317,753 RAC: 2,586 |

Keith Meyers is knowledgeable and helpful on many project forums. An asset to the community.

Bill F

In October of 1969 I took an oath to support and defend the Constitution of the United States against all enemies, foreign and domestic; there was no expiration date.


mikey Joined: 8 May 09 Posts: 3339 Credit: 524,010,781 RAC: 0 |

For N body, I have 9766 validation inconclusive, 382 valid, 9 errors. You can also change the number at the end of the URL (the offset): it starts at 20, then 40, then 60, but you can enter 220, 2220 or 4860 to jump straight to those tasks, then page up or down from there. |
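The offset trick described above can be scripted. Below is a minimal Python sketch, assuming the task list pages in steps of 20 (as described above) and is sorted newest first, so the oldest tasks of a category sit on the last page. The URL is a made-up placeholder, not MilkyWay@home's real address; only the `offset` parameter comes from the thread.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

PAGE_SIZE = 20  # tasks per page; offsets step 20, 40, 60, ... per the thread


def with_offset(url: str, offset: int) -> str:
    """Return `url` with its `offset` query parameter set (added if missing)."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    if any(key == "offset" for key, _ in pairs):
        # Replace the value in place so the other parameters keep their order.
        pairs = [(k, str(offset) if k == "offset" else v) for k, v in pairs]
    else:
        pairs.append(("offset", str(offset)))
    return urlunsplit(parts._replace(query=urlencode(pairs)))


def last_page_offset(total_tasks: int, page_size: int = PAGE_SIZE) -> int:
    """Offset of the final page (the oldest tasks, if the list is newest-first)."""
    if total_tasks <= 0:
        return 0
    return (total_tasks - 1) // page_size * page_size


if __name__ == "__main__":
    # Hypothetical listing URL; substitute the one from your own browser.
    url = "https://example.org/results.php?userid=12345&offset=0"
    print(with_offset(url, last_page_offset(9766)))
```

With the 9766 inconclusive tasks mentioned above, this prints the placeholder URL with `offset=9760`, i.e. the page holding the last 6 tasks of the list.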

HRFMguy Joined: 12 Nov 21 Posts: 236 Credit: 575,038,236 RAC: 0 |

Quote: "For N body, I have 9766 validation inconclusive, 382 valid, 9 errors." Also good to know. |


Joined: 8 Nov 11 Posts: 205 Credit: 2,905,403 RAC: 0 |

I may be missing the point, but I am baffled as to why the number of unsent Nbody tasks is not really going down. It has shifted by less than half a million in the last couple of days, yet the same amount is being processed at any one time. Each WU takes, say, 3 minutes or less on average, so why aren't the numbers dropping? In a day, millions of WUs are being completed. The number waiting for validation is very small in the server figures, so they are not there; it did hit 73000 at one point, but last time I looked it was 550. It would be nice to see how big the total number of Validation Inconclusive is; in theory it must be millions. Looking at the latest server figures, is the number of unsent going up? |


Joined: 13 Apr 17 Posts: 256 Credit: 604,411,638 RAC: 0 |

Quote: "I may be missing the point but I am baffled as to why the number of Nbody unsent is not really going down ... Looking at the last server figures the number of unsent is going up ?" Maybe I am also missing something, but the way I understand it, "in progress" means a task has been sent out, not that it is running/crunching. I have many tasks in my queue, but they are not running; only one runs at a time. Yet they are all counted/displayed as "in progress". So if we all had no queue, just one task present and actively crunching/running, then your logic would be correct? The sun is shining and we should be getting outside ... |


Joined: 16 Mar 10 Posts: 218 Credit: 110,420,422 RAC: 3,848 |

Septimus, the state of any task (aka result) is stored in several flags, and I think the problem here might be that the query that populates the server status "unsent tasks" items only checks one of the flags! A little more detail... Each result record has a field called server_state, one of whose values indicates an unsent task; all the other possible values of that field relate to basic status (e.g. "In progress" or "Completed"). There is another field called outcome, which is where states such as "Success", "Error" and "Validation error" are recorded. It is zero until the task fails to be sent, is marked as "don't need", or is returned. The [unmodified] code of the server version that MW seems to be using just checks server_state for the value 2 (which is Unsent); it probably ought to also check that outcome is zero rather than taking that on trust! I don't think the actions Tom has taken to thin out the tasks that are candidates for being sent can change server_state until the actual work units go away completely, there being no suitable value for the server_state field itself (and it's probably only the server status page that is confused by that lack!). If I'm right about this, any fluctuations visible in the unsent values will reflect results returned (reduction!) and retries requested (increase!) until the "don't need" work units are either removed or turned back into "can send" (if that's possible). In the former case the number should drop like a stone; the latter would only be sensible once the backlog of "live" work units is cleared. I hope the above makes some sort of sense :-) Cheers - Al. Note: I don't run a BOINC server, so my answer is based on a code dive rather than first-hand experience! I have looked at the PHP code of the server_status page, but there's no guarantee that the MW folks haven't tweaked it (or that I've looked at the right version...) [Edited to add "don't need" to the "outcome" description.] |
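Al's point about two separate fields can be illustrated with a throwaway SQLite table. This is a sketch, not the real BOINC schema or the actual server_status PHP query: the table holds only the two columns discussed, the values 2 (Unsent) for server_state and 0 for outcome come from the post above, and the other two constants are assumptions used only to build example rows.

```python
import sqlite3

# From the post: server_state 2 = Unsent; outcome stays 0 until the task
# fails to be sent, is marked "don't need", or is returned.
SERVER_STATE_UNSENT = 2
OUTCOME_INIT = 0
# Assumed values for the example rows only (check BOINC's headers before relying on them).
SERVER_STATE_IN_PROGRESS = 4
OUTCOME_DIDNT_NEED = 5


def unsent_counts(rows):
    """Count 'unsent' results two ways for (server_state, outcome) rows.

    Returns (server_state_only, server_state_and_outcome).
    """
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE result (server_state INTEGER, outcome INTEGER)")
    db.executemany("INSERT INTO result VALUES (?, ?)", rows)
    # Check only server_state, as Al believes the status page does.
    loose = db.execute(
        "SELECT COUNT(*) FROM result WHERE server_state = ?",
        (SERVER_STATE_UNSENT,),
    ).fetchone()[0]
    # Also require outcome = 0, as Al suggests it probably should.
    strict = db.execute(
        "SELECT COUNT(*) FROM result WHERE server_state = ? AND outcome = ?",
        (SERVER_STATE_UNSENT, OUTCOME_INIT),
    ).fetchone()[0]
    db.close()
    return loose, strict


if __name__ == "__main__":
    rows = (
        [(SERVER_STATE_UNSENT, OUTCOME_INIT)] * 3          # genuinely waiting to be sent
        + [(SERVER_STATE_UNSENT, OUTCOME_DIDNT_NEED)] * 4  # "don't need", still flagged unsent
        + [(SERVER_STATE_IN_PROGRESS, OUTCOME_INIT)] * 2   # already sent out
    )
    print(unsent_counts(rows))  # (7, 3)
```

Under Al's hypothesis, the status page would report the first number while only the second reflects work that can actually be sent.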


Joined: 8 Nov 11 Posts: 205 Credit: 2,905,403 RAC: 0 |

Thanks guys for your explanations, very helpful. I guess I will have to be a bit more patient. |


Joined: 11 Mar 22 Posts: 42 Credit: 21,902,543 RAC: 0 |

I took a closer look at my NBody WUs in progress and the WUs listed as "validation inconclusive", which have added up to 3511 by now. All of those are WUs where only one copy has been sent by the server; the "wingman" copies are listed as "unsent". My current NBody WUs in progress show three copies, e.g. one completed on 8 March, mine (in progress), which was sent on 19 April, and one that was sent on 11 March and timed out on 23 March. It looks like the server is sending out a second WU copy with a delay of at least 4-5 weeks, for whatever reason. This results in a huge number of WUs shown with a copy marked as "unsent". |


Joined: 13 Apr 17 Posts: 256 Credit: 604,411,638 RAC: 0 |

Quote: "... with a delay of at least 4-5 weeks, for whatever reason ..." The reason, as you surely know, is a disk crash a couple of weeks ago. So it will take a looong time to catch up ...

mikey Joined: 8 May 09 Posts: 3339 Credit: 524,010,781 RAC: 0 |

Quote: "I took a closer look into my NBody WUs in progress ... the 'wingman' WUs are listed as 'unsent'." Someone said they don't send the 2nd task until after the 1st task is returned. If true, that sounds crazy to me, but it would mean that all those 2nd (and later) tasks are at the back end of the queue, as tasks are sent in FIFO order. I can understand the 3rd and later tasks not being sent until one of the first two tasks fails to validate, but if you KNOW you will need a 2nd task anyway, why not send it out when you send out the 1st task? It just can't go to the same person the 1st task was sent to. |
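mikey's FIFO point can be made concrete: a copy created only when the first result comes back joins the back of the queue, so it waits for everything already queued ahead of it. A toy Python simulation under stated assumptions (strict FIFO, a fixed number of sends per day, no other arrivals); the backlog and send-rate figures are made up and are not MilkyWay@home's real numbers.

```python
from collections import deque


def days_until_sent(backlog: int, sends_per_day: int) -> int:
    """Whole days until a copy appended behind `backlog` unsent tasks goes out.

    Toy model: strict FIFO, a fixed number of sends per day, no new arrivals.
    """
    if sends_per_day <= 0:
        raise ValueError("sends_per_day must be positive")
    queue = deque(range(backlog))  # older unsent tasks, oldest first
    queue.append("wingman copy")   # created when the first result came back
    day = 0
    while True:
        day += 1
        for _ in range(min(sends_per_day, len(queue))):
            if queue.popleft() == "wingman copy":
                return day


if __name__ == "__main__":
    # Illustrative numbers only: 2,900 tasks ahead, 100 sent per day.
    print(days_until_sent(2_900, 100))  # 30
```

Under these assumptions the wait is roughly backlog divided by sends per day, so with a queue in the millions the gap between the first result and its wingman copy can easily stretch to weeks.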


Joined: 14 Feb 22 Posts: 12 Credit: 3,249,186 RAC: 0 |

mikey wrote: "... if you KNOW you will need a 2nd task anyway why not send it out when you send out the 1st task? It just can't go to the same person that the 1st task was sent to." I noticed that almost all my GPU Separation tasks get validated after I return results (task ..._0), so no second task needs to be issued to someone else. However, for N-body tasks on the CPU, I see the complete opposite. Almost all go into "validation inconclusive" status, at which point a task is created and queued up to be issued to a wingman to attain quorum. Is there a reason why the two validators are so different? If the N-body validator were able to validate results upon receipt of the initial result rather than deeming them inconclusive, it would be a big win for reducing the current backlog, since no more tasks would be added to the queue. Alternatively, if the nature of this validator is to treat a majority of initial results as inconclusive, perhaps it would make sense, like you said, to send out the first two tasks at the same time. Edit: Found this. If this is the code for the validator, it would probably explain why my CPU tasks (and probably those of others who have pointed their CPUs at N-body) fail to get validated after the first issued task is returned: our clients haven't met the requirements to pass adaptive validation. If so, new clients crunching N-body tasks would probably not help with the queue, as our tasks would all require a wingman to validate our results, and it will be several weeks if not months before those tasks are issued to the wingmen.
If there's a way to influence the assigner to prioritise non-_0 tasks for assignment, that might help more clients become eligible to pass adaptive validation, and therefore prevent new tasks from being created. This paper from 2005 indicates this is possible ("The queue on the BOINC server is FIFO by default but later versions of BOINC supports prioritizing among WUs"), but I haven't been able to find the options in the BOINC project wiki or the background tasks wiki. |
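The prioritisation idea above (retries first, initial _0 tasks after, FIFO within each group) can be sketched with a heap. This is a hypothetical illustration of the proposal, not the BOINC feeder or any option it actually offers; the task names are made up, and reading the copy number from the suffix after the last underscore is an assumption based on the _0 naming mentioned in the thread.

```python
import heapq
import itertools


class SendQueue:
    """Hypothetical send order: retries (_1, _2, ...) before initial copies (_0).

    Within each group, tasks leave in arrival (FIFO) order.
    """

    def __init__(self) -> None:
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker preserving FIFO order

    def push(self, task_name: str) -> None:
        # Assumption: the copy number is the suffix after the last underscore.
        is_initial = task_name.rsplit("_", 1)[-1] == "0"
        # False sorts before True, so retries are popped first.
        heapq.heappush(self._heap, (is_initial, next(self._arrival), task_name))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    q = SendQueue()
    for name in ["wu_a_0", "wu_b_0", "wu_a_1", "wu_c_0", "wu_b_1"]:
        q.push(name)
    print([q.pop() for _ in range(len(q))])
    # ['wu_a_1', 'wu_b_1', 'wu_a_0', 'wu_b_0', 'wu_c_0']
```

The arrival counter keeps ordering stable within a group and also means task names are never compared directly.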


Joined: 20 Nov 07 Posts: 54 Credit: 2,663,789 RAC: 0 |

^ Really good post. Hoping Tom replies. |


Joined: 8 Nov 11 Posts: 205 Credit: 2,905,403 RAC: 0 |

It’s mentioned on the Nbody WU Flush thread that wingman (second) tasks are not sent until 30 days after the first task is received back. I can confirm that: I did over 100 tasks today as a "wingman", and they were all sent out today, exactly 30 days after the first task was received back. |


Joined: 16 Mar 10 Posts: 218 Credit: 110,420,422 RAC: 3,848 |

Quote: "It’s mentioned on the Nbody WU Flush thread that wingman, second tasks are not sent until 30 days after the first task is received back. ..." I think you'll find that the long delay is/was simply a result of the huge backlog of NBody tasks (which now appears to have been cleared, so let's see how quickly it catches up). Supposedly new tasks also appear to have been waiting to be sent out since about the same time! The same sort of thing happened with Separation tasks for several weeks; with the backlog being smaller, it caught up slowly but surely. Patience was the main requirement :-) Cheers - Al. P.S. I eventually took a "CPU work only" system off another project (a temporary one, during WCG down-time) and let it do Nbody work so I could get first-hand experience of what happens to initial tasks and retries at present. It has been a useful insight, but when WCG returns, it goes back there... |
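How quickly the backlog catches up depends on how many returned results spawn another copy, which may also help explain why the unsent count barely moved earlier in the thread. A back-of-the-envelope Python sketch, assuming every sent task is returned and a fraction `p_retry` of returns creates exactly one new unsent task; all figures in the example are invented.

```python
def days_to_clear(backlog: float, sends_per_day: float, p_retry: float) -> float:
    """Rough days to empty the unsent queue under a steady send rate.

    Each day `sends_per_day` tasks leave the queue, and (assumption) a fraction
    `p_retry` of them come back needing one more copy, which re-enters it.
    """
    if sends_per_day <= 0:
        raise ValueError("sends_per_day must be positive")
    if not 0 <= p_retry < 1:
        raise ValueError("p_retry must be in [0, 1), otherwise the queue never drains")
    net_drain = sends_per_day * (1 - p_retry)  # net tasks leaving the queue per day
    return backlog / net_drain


if __name__ == "__main__":
    # Invented figures: 1,000,000 unsent, 200,000 sends per day.
    print(days_to_clear(1_000_000, 200_000, 0.0))   # 5.0
    print(days_to_clear(1_000_000, 200_000, 0.75))  # 20.0
```

The key factor is 1 / (1 - p_retry): if most initial results come back inconclusive, the same send rate clears the queue several times more slowly.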


Joined: 8 Nov 11 Posts: 205 Credit: 2,905,403 RAC: 0 |

Hope you are right, Alan… there are a lot of Inconclusives to shift, I suspect. |


Joined: 11 Mar 22 Posts: 42 Credit: 21,902,543 RAC: 0 |

Quote: "The reason, as you surely know, is a disk crash a couple of weeks ago." Well, I disagree. To me it looks like the configuration is broken. For each WU created, a second copy (at least 2 copies, better 3) should be sent out simultaneously by default. For the time being, the server is popping out single WUs only, and that doesn't make sense to me, because that was not how it worked before the server trouble. So it's not enough just to replace the failed HDD with a backup; the configuration scripts also have to be checked and adjusted. This setup is not the normal way it should work. |


Joined: 13 Apr 17 Posts: 256 Credit: 604,411,638 RAC: 0 |

Quote: "The reason, as you surely know, is a disk crash a couple of weeks ago." This situation has been discussed more than once. What I see on my side is that everything is working as it should. Maybe other sharp crunchers can chime in and solve the mystery .... |

Tom Donlon Joined: 10 Apr 19 Posts: 408 Credit: 120,203,200 RAC: 0 |

Quote: "To me it looks like the configuration is broken. ... So it's not just enough to replace the failed HDD with a backup but also the configuration scripts have to be checked and adjusted." From what I see, the validation process (at least in terms of sending out WUs) is working correctly. We cannot send out 2 or 3 WUs immediately, because (i) not every WU gets checked against wingmen, (ii) the process that oversees this is semi-random and based on the user's statistics (how often they return invalid WUs), so it cannot be determined ahead of time, and (iii) there is no guarantee that all of those WUs would be returned in a timely manner to be checked against each other, so we would often have to send out more WUs in addition to those initial 2 or 3 anyway. |
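Tom's point (ii), that the need for a wingman is decided per result, semi-randomly, from the host's track record, is why the number of copies can't be fixed when a work unit is created. Below is a hypothetical Python sketch of such a rule. The streak threshold (`min_streak`) and the 5% spot-check floor (`floor`) are invented for illustration and are not MilkyWay@home's or BOINC's actual parameters.

```python
import random


def needs_wingman(error_rate: float, valid_streak: int, rng: random.Random,
                  min_streak: int = 10, floor: float = 0.05) -> bool:
    """Hypothetical adaptive-validation decision, made when a result is returned.

    Hosts without `min_streak` consecutive valid results always need a wingman;
    proven hosts are spot-checked with probability max(error_rate, floor).
    """
    if valid_streak < min_streak:
        return True
    return rng.random() < max(error_rate, floor)


if __name__ == "__main__":
    rng = random.Random(2022)  # seeded so the run is repeatable
    print(needs_wingman(0.0, 0, rng))  # True: a new host is always checked
    checks = sum(needs_wingman(0.0, 50, rng) for _ in range(10_000))
    print(f"proven host checked {checks} times out of 10,000")  # about 5% on average
```

Because the coin is tossed only when a result comes back, the server cannot know in advance whether a work unit will need one copy or several. A rule of this shape would also be consistent with the suggestion earlier in the thread that newer CPU hosts have not yet earned enough trust to skip the wingman.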


Joined: 13 Apr 17 Posts: 256 Credit: 604,411,638 RAC: 0 |

+1 and by the way, this is how things have always been handled ... |

©2025 Astroinformatics Group