Per Host Limit


| Author | Message |

|---|---|

|

Joined: 17 Nov 07 Posts: 17 Credit: 663,827 RAC: 0 |

Hello to all fellow crunchers here at milkyway@home,

I noticed this a while ago in the Messages tab:

19/11/2007 15:46:11|Milkyway@home|Message from server: No work sent
19/11/2007 15:46:11|Milkyway@home|Message from server: (reached per-host limit of 8 tasks)

Is this right? Are new limits being set? Previously the quota was set in the thousands per host.

Kind Regards, John Gray :0)

|

DaveSun Joined: 10 Nov 07 Posts: 28 Credit: 2,549,231 RAC: 0 |

Hello To all fellow crunchers here at milkyway@home At the moment there is a limit of 2000 units per core per day. But the project is set to limit the number of units per host to 8 at one time. As these are reported back you receive more. The only people that might have a major problem with this would be those that don't have a continuous connection and run this project exclusively. |

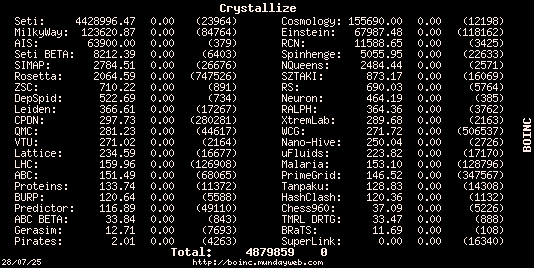

Crystallize Joined: 12 Nov 07 Posts: 31 Credit: 123,621 RAC: 0 |

But to be realistic, 8 WUs take an hour to complete at most. I suppose that's OK for those in the US, but for us in other parts of the world other issues also matter: latency and/or errors while uploading and downloading, etc. I think WUs with more work per unit are necessary in the long run, and rather sooner than later! (I don't know about you, but I only get about 4, max 5, WUs per cycle, not 8...)

|

|

Joined: 17 Nov 07 Posts: 17 Credit: 663,827 RAC: 0 |

Hello,

Many thanks for your replies. Now I understand better, thanks to you :0)

I personally think 2000 work units /core is extremely generous. Even if I had my full complement of crunchers on the go I couldn't do 2000 a day:

7x dual core
2x quad core
2x 8 core
Total: 38 cores in all

The 8 WUs at a time /core is rather a sensible idea: it ensures that you don't get any more until these are returned (crunch 8 WUs, get another 8 WUs). Brilliant.

I thought (and got it wrong...) that it was changed from 2000 to 8 (max), and that would be your lot for 24 hrs. lhc@home imposed heavy restrictions on the number of WUs one could have in a 24 hr period, as they have more crunchers than WUs available.

Anyway, I'm glad to have the opportunity to crunch 1 WU and to have taken part.

Kind Regards, John :0) |

Campion Joined: 5 Oct 07 Posts: 7 Credit: 4,807,766 RAC: 0 |

You got that right! Short units run at about 6 mins per unit, and a quad core can run 4 units at a time, so it takes 12 minutes to complete a cycle of 8 units. I don't know who set this, and I don't think it is anything that I can control, but BOINC is set to contact the server 20 minutes after it realizes that the host is at its 8 unit limit. So it's either run other projects or have your machine idle for 8 minutes. Add being on a dial-up connection and you have to log on multiple times to get more work. It's OK if you happen to be doing something online for a while, but it is becoming almost too much of an effort to keep BOINC running Milkyway@Home units to make it worthwhile / enjoyable. If anyone from my team asks about signing up for this project I would have to advise them against it at the moment. |
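The arithmetic in this post (8 tasks, 4 cores, roughly 6 minutes per task, a 20 minute wait before the next server contact) can be sketched in a few lines of Python. The function name and parameters are made up for illustration and are not part of BOINC; the model also assumes every task takes the same time and that no other project runs in between.

```python
import math

def idle_minutes(tasks_per_host, cores, minutes_per_task, backoff_minutes):
    """Minutes a host sits idle before the next server contact.

    Assumes one task per core at a time and equal task lengths.
    """
    # Number of back-to-back "rounds" needed to work through the queue.
    rounds = math.ceil(tasks_per_host / cores)
    busy = rounds * minutes_per_task
    # The host is idle for whatever is left of the wait, never negative.
    return max(0, backoff_minutes - busy)

# Quad core, limit of 8 tasks, 6 minute tasks, 20 minute wait.
print(idle_minutes(8, 4, 6, 20))   # 8

# Same host with a limit of 20 tasks: the queue outlasts the wait.
print(idle_minutes(20, 4, 6, 20))  # 0
```

Under these assumptions the queue has to hold at least `cores * backoff_minutes / minutes_per_task` tasks (about 14 for this quad core) to bridge the wait.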

|

Joined: 1 Sep 07 Posts: 18 Credit: 1,658,251 RAC: 0 |

Hello To all fellow crunchers here at milkyway@home

11/21/2007 02:09:28|Milkyway@home|Sending scheduler request: To fetch work. Requesting 100470 seconds of work, reporting 0 completed tasks
11/21/2007 02:09:33|Milkyway@home|Scheduler request succeeded: got 0 new tasks
11/21/2007 02:09:33|Milkyway@home|Message from server: No work sent
11/21/2007 02:09:33|Milkyway@home|Message from server: (reached per-host limit of 8 tasks)

Oops, I forgot. Edited: I only have 1 WU left and still get this message. |

DaveSun Joined: 10 Nov 07 Posts: 28 Credit: 2,549,231 RAC: 0 |

Have the other 7 results reported or have they only been uploaded? If the Tasks tab of BOINC Manager shows 8 tasks listed and 7 of them are listed as "Ready to Report" in their status then the limit of 8 still applies. Once they are reported you should get more work based on other factors for your host. |

LiborA Joined: 15 Sep 07 Posts: 15 Credit: 9,818,265 RAC: 0 |

Interesting! On my Windows machine I get only 8 WUs per CPU per day, but on my Linux machine the situation is different. I joined my Linux machine to the project today and already have 29 WUs downloaded (5 of these were cancelled by the server as redundant results, and the second interesting thing is that all of these cancelled WUs were sent to another cruncher after being cancelled on my computer). |

|

Joined: 17 Nov 07 Posts: 17 Credit: 663,827 RAC: 0 |

Hello, Something's not right. I have had 2 quad cores starving, in need of WUs to crunch. I set this project to run exclusively and now my machines are starving. I put them to work on another project for the time being... Regards, John

|

|

Joined: 17 Nov 07 Posts: 17 Credit: 663,827 RAC: 0 |

Hello, Upon checking the server status I note that this project has currently run out of WUs to crunch: http://milkyway.cs.rpi.edu/milkyway/server_status.php Regards, John |

|

Joined: 11 Nov 07 Posts: 41 Credit: 1,568,983 RAC: 5,193 |

Yup, it's even worse on Linux where WUs are smaller; one duallie here gets 7 min idle after every set of 8 units. I think, but don't know, that the backoff scheme is set by the server on the project: on LHC it jumps to 15 min, Einstein has 1 min and this project seems to have 7 sec:

2007-11-23 16:33:48 [Milkyway@home] Deferring communication for 7 sec |

Travis Joined: 30 Aug 07 Posts: 2046 Credit: 26,480 RAC: 0 |

Well, the reason we've got it set to a relatively low number of workunits per user is because of what we're actually doing server side. If we let people queue up 1,000 or so work units, the majority of that work would simply be wasted. What we're trying to do is run a genetic search to optimize parameters to fit a model of the Milky Way galaxy to a portion of stars in the sky. As we get results back, the population of best found parameters gets updated, and we're always generating new parameters from the population of the best results we've found. So the newer the work units are, the better the chance that they'll improve our population and help us get closer to the correct model. Basically, if 1,000 workunits are generated off the first generation of the population, and they come back to us in a day or so, chances are the population has evolved so far away from where the original 1,000 workunits were generated that all that work was for nothing. The amount of work per workunit should be increasing however, as the model gets more complex and is evaluated over more stars. |
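The staleness argument above can be illustrated with a toy simulation. This is only a sketch under loud assumptions, not the project's actual search code: the one-dimensional objective, the population of three members, the mutation step, and every name below (`fitness`, `mutate`, `report`, `simulate`) are hypothetical.

```python
import random

TARGET = 0.0  # optimum of the toy objective (hypothetical)

def fitness(x):
    # Higher is better; the best possible value is 0 at x == TARGET.
    return -abs(x - TARGET)

def mutate(parent, rng):
    # A new work unit is a small random step away from an existing member.
    return parent + rng.uniform(-1.0, 1.0)

def report(population, x):
    """Fold a returned result into the population.

    Returns True if x beat the current worst member and replaced it.
    """
    worst = min(population, key=fitness)
    if fitness(x) <= fitness(worst):
        return False
    population.remove(worst)
    population.append(x)
    return True

def simulate(stale_batch_size=1000, fresh_rounds=1000, seed=42):
    rng = random.Random(seed)
    population = [100.0, 101.0, 102.0]

    # A big queue generated up front from the first generation.
    stale = [mutate(rng.choice(population), rng)
             for _ in range(stale_batch_size)]

    # Meanwhile, single work units are generated and reported right away.
    fresh_accepted = 0
    for _ in range(fresh_rounds):
        child = mutate(rng.choice(population), rng)
        fresh_accepted += report(population, child)

    # The big queue finally comes back, long after it was generated.
    stale_accepted = sum(report(population, x) for x in stale)
    return fresh_accepted, stale_accepted

fresh, stale = simulate()
print(f"fresh results accepted: {fresh}, stale results accepted: {stale}")
```

In this toy setup the queued batch stays within one mutation step of the first generation, so once the population has moved on none of those results can displace a current member, while results that are generated and reported promptly keep improving it.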

|

Joined: 17 Nov 07 Posts: 5 Credit: 26,421 RAC: 0 |

What about increasing the limit to, say, 15 or 20? That increase should keep everyone running until BOINC contacts the server again. |

Travis Joined: 30 Aug 07 Posts: 2046 Credit: 26,480 RAC: 0 |

"What about increasing the limit to say 15 or 20. That increase should keep everyone running until boinc contacts the server again."

I've upped the limit to 20. Hopefully this will keep things running smoothly on both your end and ours :) |

Campion Joined: 5 Oct 07 Posts: 7 Credit: 4,807,766 RAC: 0 |

20 sounds like a good compromise for everyone. Thanks! |

|

Joined: 15 Nov 07 Posts: 31 Credit: 56,404,447 RAC: 0 |

Me likes twenty, too! (22?) :o) Perhaps should have said twenty, also. |

Jayargh Joined: 8 Oct 07 Posts: 289 Credit: 3,690,838 RAC: 0 |

20 is good; however, 5 per CPU would do the same and work much better for your searches, as it would prevent a slow host from downloading too many with a slow return and would allow the 8 cores to queue up a little more work to make it through 20 min. |
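To compare the two policies discussed here, a fixed per-host limit of 20 versus 5 tasks per CPU, a small Python sketch follows. The helper names are hypothetical and are not BOINC settings, and the 6 minutes per task is an assumption borrowed from the estimate earlier in the thread; real task times differ between hosts.

```python
import math

def task_cap(cores, per_host_limit=None, per_cpu_limit=None):
    """Largest number of tasks a host may hold under the given limits."""
    caps = []
    if per_host_limit is not None:
        caps.append(per_host_limit)
    if per_cpu_limit is not None:
        caps.append(per_cpu_limit * cores)
    if not caps:
        raise ValueError("at least one limit is required")
    return min(caps)

def queue_minutes(tasks, cores, minutes_per_task):
    """Wall-clock minutes until a full queue is finished, one task per core."""
    return math.ceil(tasks / cores) * minutes_per_task

MINUTES_PER_TASK = 6  # assumed, same for every host in this sketch

for cores in (1, 4, 8):
    host_cap = task_cap(cores, per_host_limit=20)
    cpu_cap = task_cap(cores, per_cpu_limit=5)
    print(
        f"{cores} core(s): per-host 20 -> {host_cap} tasks, "
        f"{queue_minutes(host_cap, cores, MINUTES_PER_TASK)} min; "
        f"5 per CPU -> {cpu_cap} tasks, "
        f"{queue_minutes(cpu_cap, cores, MINUTES_PER_TASK)} min"
    )
```

Under these assumptions an 8-core host with a per-host limit of 20 runs dry after 18 minutes, just short of a 20 minute contact interval, while 5 per CPU gives it 30 minutes of work and limits a single-core host to 5 queued tasks instead of 20.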

|

Joined: 17 Nov 07 Posts: 5 Credit: 26,421 RAC: 0 |

Many single core CPUs can burn through 5 in less than 20 min. My G5 comes close to burning through the 8 before it contacts the server. 20 seems to be working well for me, and BOINC should adjust for the slower computers and not request too much work. The problem may come as units start to get large, since BOINC may request too much work. That too will adjust after a few of the longer units run. |

Jayargh Joined: 8 Oct 07 Posts: 289 Credit: 3,690,838 RAC: 0 |

"Many single core CPUs can burn through 5 in less than 20 min. My G5 comes close to burning through the 8 before it contacts the server. 20 seems to be working well for me and boinc should adjust for the slower computers and not request too much work. The problem may come in as units start to get large as boinc may request for too much work. That too will adjust after a few of the longer units run."

Good point! Thanks Kevin... but we still have the starving 8 cores, and if you say 10 per core, that would be better than 20 to a P2 or to someone running big caches on many projects. It would help both ways. |

Jayargh Joined: 8 Oct 07 Posts: 289 Credit: 3,690,838 RAC: 0 |

Another option is to lower the time between RPC calls but I don't know if the server can handle that. ??? |

©2026 Astroinformatics Group